
Streaming Analytics: An Introduction

Also referred to as real-time analytics or data stream analytics, streaming analytics captures, processes, and analyzes data in real time, as it is generated, using technologies like Apache Kafka® and Apache Flink® to extract immediate business insights.

At Confluent, we help enterprise organizations better leverage real-time data streaming, stream processing, and integration across systems to unlock the analytics and AI use cases they need to stay competitive. Learn how streaming analytics can help your teams maximize efficiency, reduce data costs, and uncover powerful insights across both historical and real-time data.

What Is Streaming Analytics?

Streaming analytics is an approach to business analytics and business intelligence in which data is captured, processed, and analyzed in real time or near-real time, as it is generated. By delivering immediate business-level insights, it enables timely, proactive, data-driven decision-making and opens up new use cases and scenarios.

This is in contrast to traditional or batch analytics, where data is considered for static analysis only after it’s “at rest,” typically in a data warehouse, long after the business event that created it.

Instead, streaming analytics strives to enable analysis while data is still “in motion,” at the time of its creation or update. This means that trends, patterns, and anomalies can be detected as they emerge, driving new kinds of decisions, automation, efficiencies, and real-time use cases.

For instance, streaming analytics equips financial institutions to detect and react to fraudulent transactions as they’re happening, allowing them to take immediate action (such as blocking a credit card exploit before it completes). A retail chain can watch changes in inventory in real time and trigger supply chain operations to compensate, balancing just-in-time parameters such as expected demand, inventory, supply chain, and transport, or generate unique up-sell offers for its customers. Or an airline’s operations division can analyze the real-time data stream from its fleet of aircraft to predict potential faults (anomaly detection), trigger maintenance or regulatory events, and proactively schedule and reposition equipment and crews in response.
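As a minimal sketch of the fraud example, the Python below applies a "velocity" rule to each transaction as it arrives: a card is flagged when it makes more than a set number of transactions inside a sliding time window. The class name `VelocityRule`, the thresholds, and the sample events are hypothetical and chosen only for illustration. The sketch assumes events arrive in timestamp order; a production system would run this kind of logic inside a stream processor rather than over an in-memory list.

```python
from collections import defaultdict, deque


class VelocityRule:
    """Flags a card that makes too many transactions inside a sliding time window."""

    def __init__(self, max_txns=3, window_seconds=60):
        self.max_txns = max_txns
        self.window_seconds = window_seconds
        # card_id -> timestamps of transactions still inside the window
        self._recent = defaultdict(deque)

    def observe(self, card_id, timestamp):
        """Process one transaction event; return True if it should be flagged."""
        recent = self._recent[card_id]
        recent.append(timestamp)
        # Evict timestamps that have fallen out of the sliding window.
        while recent and recent[0] <= timestamp - self.window_seconds:
            recent.popleft()
        return len(recent) > self.max_txns


# Hypothetical (card_id, timestamp_in_seconds) events, in arrival order.
events = [
    ("card-1", 0), ("card-1", 10), ("card-2", 15),
    ("card-1", 20), ("card-1", 25), ("card-1", 200),
]

rule = VelocityRule(max_txns=3, window_seconds=60)
flags = [(card, ts) for card, ts in events if rule.observe(card, ts)]
print(flags)  # [('card-1', 25)]
```

The decision is made per event, at the moment the fourth transaction arrives, rather than in a later batch job over stored data.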

How Streaming Analytics Works

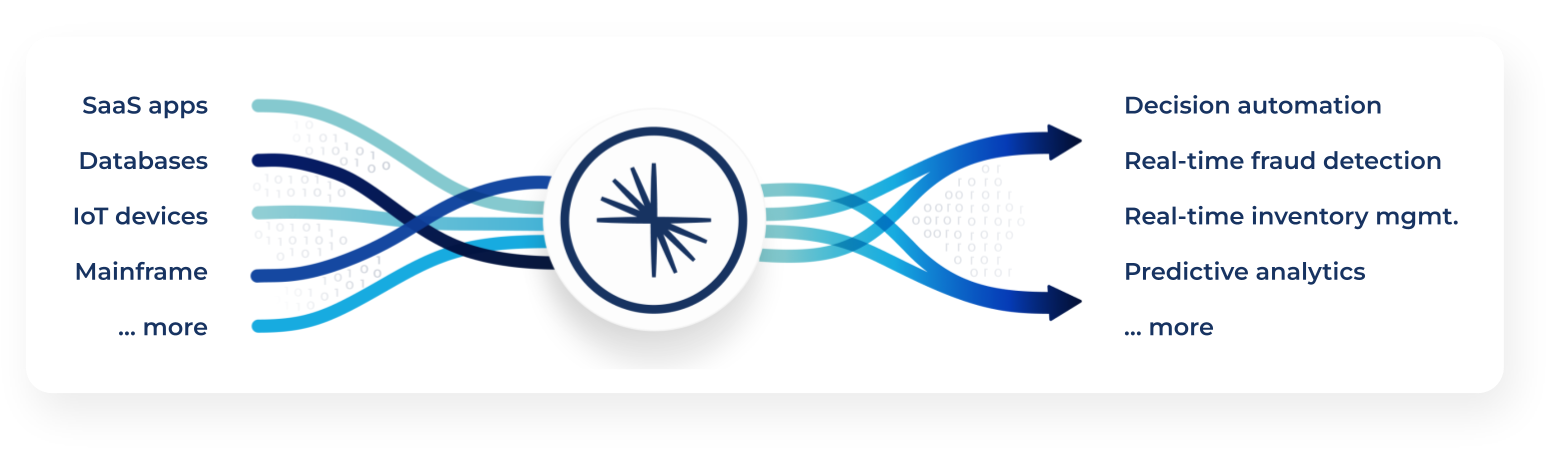

With streaming analytics, large volumes of data are continuously processed in real time. To facilitate meaningful business-level analysis, organizations use data infrastructure such as a stream processing platform, which ingests and analyzes data from multiple sources in real time (such as financial transactions, IoT sensors, social media feeds, logs, and clickstreams).

The analysis functions may range from simpler comparisons, correlations, and joins, to more sophisticated techniques such as complex event processing (CEP) and machine learning. These functions, which effectively generate new data “products” of value, may be implemented in application code or using standard SQL with stream processing extensions.
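To make one of the simpler analysis functions concrete, here is a small sketch of a windowed aggregation written as application code. It groups events into fixed, non-overlapping ("tumbling") windows and sums an amount per key. The function name, the event layout `(timestamp, key, amount)`, and the sample data are assumptions made for this example; SQL dialects with stream processing extensions typically express the same idea as a windowed `GROUP BY`.

```python
from collections import defaultdict


def tumbling_window_totals(events, window_seconds):
    """Sum amounts per (window_start, key) over fixed, non-overlapping windows."""
    totals = defaultdict(float)
    for timestamp, key, amount in events:
        # Every timestamp maps to exactly one window, identified by its start time.
        window_start = (timestamp // window_seconds) * window_seconds
        totals[(window_start, key)] += amount
    return dict(totals)


# Hypothetical sales events: (timestamp_in_seconds, store, amount).
sales = [
    (1, "store-a", 20.0),
    (30, "store-b", 5.0),
    (59, "store-a", 10.0),
    (61, "store-a", 7.5),
]

totals = tumbling_window_totals(sales, window_seconds=60)
for (window_start, store), amount in sorted(totals.items()):
    print(window_start, store, amount)
```

Each total is a new, derived data "product": a per-store, per-minute figure that did not exist in the raw event stream.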

The stream processing platform can use the results of this analysis to drive real-time decisions or visualizations, route them to traditional data warehouses for further or legacy business intelligence, or send them to other operational systems to drive other functions in the organization.

In this way, a stream processing platform can be seen as a centralized way to connect an organization’s data sources and sinks, with real-time value-added computation and analysis along the way.

How Streaming Analytics Differs From Batch Analytics

Streaming analytics and batch-based analytics represent two related approaches to data analytics, differing in when data is available for analysis. With batch analytics, data is considered for what is effectively static analysis only after it’s “at rest,” typically in a data warehouse, long after the business event that created it. Streaming analytics, on the other hand, enables analysis of data when it’s still “in motion,” at the time of its creation or update.

In this regard, streaming analytics represents the evolution of analytics, from batch to streaming. An organization can introduce a stream processing platform to connect data sources and sinks, thus adding new capabilities, without disturbing existing or legacy systems used for batch analytics.
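The difference in timing can be illustrated with a small sketch that computes the same metric both ways. The batch function needs the full stored data set before it can answer, while the streaming version keeps a small amount of state and has an up-to-date answer after every event. The names `batch_average` and `RunningAverage` and the sample readings are hypothetical.

```python
def batch_average(stored_values):
    """Batch style: the answer is available only once all values are at rest."""
    return sum(stored_values) / len(stored_values)


class RunningAverage:
    """Streaming style: update the answer incrementally as each value arrives."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0

    def update(self, value):
        self.count += 1
        # Incremental mean: shift the current mean toward the new value.
        self.mean += (value - self.mean) / self.count
        return self.mean


readings = [10.0, 20.0, 30.0, 40.0]

stream = RunningAverage()
running = [stream.update(value) for value in readings]

print(running)                  # [10.0, 15.0, 20.0, 25.0]
print(batch_average(readings))  # 25.0
```

Both approaches agree on the final value; the streaming version simply makes an intermediate result available at every step, which is what allows a system to react while events are still arriving.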

Comparing Streaming Analytics vs. Batch Analytics

| Feature | Streaming Analytics | Batch Analytics |
|---|---|---|
| When data is analyzed | As it is being generated | After it has been stored in a database |
| Typical use cases | Real-time applications | Non-real-time applications |
| Benefits | Ability to react to events in real time | Ability to analyze large amounts of data |
| Challenges | Complex to implement | Can be slow for real-time applications |
| Reaction time | Real-time/immediate | Delayed |
| Decision-making | Forward-looking, contemporaneous, and retrospective | Retrospective only |
| Analysis and decision latency | Low | Medium to high |
| Intelligence/Analytics paradigm | Both push-based, continuous intelligence systems and pull-based, on-demand analytics systems | Pull-based, on-demand analytics only |
| Storage cost | Low | High |
| Data processing | Real-time | Request-based/periodic |
| Dashboard refresh | Every second or minute | Hourly or weekly |
| Ideal for | Decision automation, process automation | Non-time-sensitive use cases like payroll management, weekly/monthly billing, or low-frequency reports based on historical data |

Advantages of Streaming Analytics

Streaming analytics enables organizations to act on real-time insights, improving responsiveness, operational efficiency, and competitive advantage. This unlocks new opportunities for innovation and smarter decision-making, which are vital for fast-paced, highly competitive industries.

Industries That Rely on Streaming Analytics Today Include:

- Finance: Monitor financial markets for signs of fraud or other suspicious activity.

- Retail: Analyze customer behavior and optimize inventory levels.

- Manufacturing: Monitor production lines and identify potential problems before they cause a disruption.

- Logistics: Track the movement of goods and ensure that they arrive on time.

- Healthcare: Evaluate patients' health and identify potential problems early on.

Top 5 Benefits of Adopting Streaming Analytics

Real-Time Insights, More Accurate Predictions, and Faster Decision-Making

Streaming analytics allows organizations to gain insights into data as it is being generated, which can help them make faster and better decisions, and anticipate future outcomes. For example, a streaming analytics solution could be used to track customer behavior in real-time and identify potential fraud or security threats.

Improved Operational Efficiency

Streaming analytics can help organizations automate tasks and processes, which can save time and money. For example, a streaming analytics solution could be used to automate the process of generating reports or sending alerts.

Reduced Risk

Streaming analytics can help organizations identify and respond to potential risks more quickly, which can help them avoid costly disruptions. For example, a streaming analytics solution could be used to monitor the performance of critical infrastructure and identify potential problems before they cause an outage.

Enhanced Customer Experiences

Streaming analytics can be used to personalize the customer experience by providing real-time insights into customer behavior. For example, a streaming analytics solution could be used to recommend products or services to customers based on their past purchases.

Increased Innovation

Streaming analytics can help organizations innovate faster by providing them with access to real-time data that can be used to develop new products and services. For example, a streaming analytics solution could be used to track customer sentiment in real-time and identify new opportunities for product development.


Common Challenges With Streaming Analytics

While streaming analytics comes with significant benefits, transitioning from batch to stream processing isn't without its challenges.

Managing high data volumes, ensuring low-latency processing, and integrating modern and legacy systems can become significant obstacles to success with streaming analytics. Understanding these challenges is crucial for designing effective real-time data solutions.

Here are the top 5 challenges of streaming analytics:

- Data volume: The amount of data that businesses generate is growing exponentially, and this makes it difficult to analyze all of the data in real-time.

- Data velocity: Real-time analytics requires businesses to analyze data as it is being generated, which can be difficult to do if the data is coming in at a high velocity.

- Data variety: Businesses generate data from a variety of sources, and this can make it difficult to integrate all of the data and analyze it in real-time.

- Data quality: Real-time analytics requires businesses to have high-quality data, and this can be difficult to achieve, especially if the data is coming from a variety of sources.

- Cost: Real-time analytics can be expensive to implement and maintain, and this can be a barrier for some businesses.

Despite these challenges, streaming analytics can be a valuable tool for businesses that need to make decisions in real-time. By overcoming these challenges, businesses can gain a competitive advantage by making better decisions faster.
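As one illustration of the data quality challenge, a common pattern is to validate each event as it arrives and set aside malformed records for later inspection instead of letting them corrupt downstream results. The sketch below assumes a hypothetical sensor event with `sensor_id` and `reading` fields; the function names and validation rules are illustrative, not a prescribed schema.

```python
def validate(event):
    """Return a list of problems found in one event (empty if the event is clean)."""
    problems = []
    sensor_id = event.get("sensor_id")
    if not isinstance(sensor_id, str) or not sensor_id:
        problems.append("missing sensor_id")
    reading = event.get("reading")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(reading, bool) or not isinstance(reading, (int, float)):
        problems.append("reading is not numeric")
    return problems


def route(events):
    """Split events into clean records and a 'dead letter' list of rejects."""
    valid, dead_letter = [], []
    for event in events:
        problems = validate(event)
        if problems:
            dead_letter.append({"event": event, "problems": problems})
        else:
            valid.append(event)
    return valid, dead_letter


# Hypothetical events from several sources with inconsistent quality.
incoming = [
    {"sensor_id": "s1", "reading": 21.5},
    {"sensor_id": "", "reading": 3},
    {"sensor_id": "s2", "reading": "n/a"},
]

valid, dead_letter = route(incoming)
print(len(valid), len(dead_letter))  # 1 2
```

Keeping the rejected events together with the reason for rejection makes it possible to repair and replay them later without pausing the main stream.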

Common Industry Use Cases for Streaming Analytics

While vertical use cases for streaming analytics can vary widely, they all share key characteristics: the need to process and act on data instantly, drive timely business decisions, and address situations where real-time visibility creates measurable value. By examining these shared patterns, you can see how streaming analytics could transform your organization in any scenario where timely, actionable information is critical.

Here are the top use cases for streaming analytics we've seen organizations adopt and scale:

Financial Fraud Detection

Real-time analytics can be used to detect fraud as it happens, such as credit card fraud or insurance fraud.

Real-Time & Predictive Customer Service

Real-time analytics can be used to improve customer service by providing customer support agents with the information they need to resolve issues quickly and efficiently, as well as AI insights for predictive customer support.

Personalized Marketing

Streaming analytics can be used to personalize marketing campaigns and target customers with the most relevant offers.

Supply Chain Management

Real-time analytics can be used to optimize supply chain management by tracking the movement of goods and ensuring that they arrive on time.

Manufacturing

Real-time analytics can be used to improve manufacturing processes by identifying potential problems early on and taking corrective action.

Financial Services

Real-time analytics can be used to monitor financial markets for signs of fraud or other suspicious activity.

Healthcare

Real-time analytics can be used to monitor patients' health and identify potential problems early on.

Media and Entertainment

Real-time analytics can be used to personalize content and recommendations for users.

Internet of Things (IoT)

Real-time analytics can be used to collect and analyze data from IoT devices to gain insights into how people are using products and services.

Self-Driving Cars

Real-time analytics is essential for self-driving cars to make decisions in real time about how to navigate the road safely.

Common Technologies and Solutions

A variety of tools and platforms form the backbone of streaming analytics architectures, enabling efficient data ingestion, processing, and analysis at scale. The most common technologies used for streaming analytics include:

- Apache Kafka: An open-source distributed event streaming platform used to collect, store, and process real-time data.

- Apache Flink: An open-source stream processing framework that unifies real-time stream processing and batch processing.

- Apache Spark: An open-source cluster computing framework that can process large amounts of data in batch or, through its streaming APIs, in near-real time.

- Apache Druid: An open-source real-time analytics database.

- Apache Beam: An open-source framework for defining batch and streaming data-parallel processing pipelines, which run on supported distributed processing back ends (such as Apache Flink, Apache Spark, and Google Cloud Dataflow).

- Imply: A data platform built on Apache Druid.

- Apache Pinot: An open-source distributed columnar data store that provides low-latency queries for real-time analytics, with high scalability and fault tolerance.

- Confluent data streaming platform: A managed streaming platform built on Apache Kafka and Apache Flink that provides additional features, management tools, and integrations to support large-scale streaming data pipelines and stream processing.

- Google Cloud Dataflow: A managed service for running Apache Beam pipelines.

- Amazon Kinesis: A managed service for real-time streaming data.

- Microsoft Azure Stream Analytics: A managed service for real-time streaming data processing.

- StarTree: A platform for analysis and visualization of large-scale time-series data.

- Rockset: An indexing and query service supporting real-time analytics on structured and semi-structured data.

Why Streaming Analytics With Confluent

The Confluent data streaming platform empowers organizations to build real-time streaming data pipelines with reliability, scalability, and ease of integration. Learn how we help organizations across industries overcome common analytics challenges and unlock more responsive, data-driven operations.

Confluent offers Apache Kafka, a full-featured data streaming engine, with self-managed and fully managed deployment options ready to span hybrid and multicloud environments. As a managed service, Confluent completes Kafka with additional enterprise-grade security features, a centralized control plane for managing and monitoring Kafka clusters and connectors, and pre-built integrations that connect Kafka with other applications. We've also rebuilt Kafka to create Kora, a cloud-native Kafka engine that makes Confluent Cloud faster, more cost-effective, and more reliable. These features enable businesses to access, store, and manage data more easily as continuous, real-time streams.

To facilitate data connectivity within an organization, Confluent offers a wide range of data connectors that move data between Kafka and other data sources or sinks. These include Kafka Connect (an open-source framework for building and running Kafka connectors), Confluent Connectors (Confluent-supported connectors for JDBC, Elasticsearch, Amazon S3, HDFS, Salesforce, MQTT, and other popular data sources), Community Connectors (contributed and maintained by community members), and Custom Connectors (built by an organization’s own developers).

Confluent also offers a range of features to protect and audit sensitive data and prevent unauthorized access.

Building on Kafka’s capabilities, Confluent also delivers real-time analysis of data streams with:

- Kafka Streams: a lightweight Java library that is tightly integrated with Apache Kafka. With it, developers can build real-time applications and microservices by processing data directly from Kafka topics and producing results back to Kafka. Because it's part of Kafka, Kafka Streams leverages the benefits of Kafka natively.

- Apache Flink: an open-source stream processing framework whose distributed processing engine can handle large-scale data streams. It supports event-time processing, stateful computations, fault tolerance, exactly-once semantics, batch processing, and a variety of data sources and sinks.

These options support a wide range of processing and analytics requirements, scalability needs, and real-time analytics architectures, so an organization can build on a single platform as its needs change or grow more complex. For example, in more complex scenarios, Kafka and Flink are often used together when analytics processing generates large intermediate data sets or requires a full range of SQL capabilities.
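Event-time processing and stateful computation, mentioned above, can be illustrated with a simplified model in plain Python. This is not the Kafka Streams or Flink API; the class `EventTimeWindowCounter`, its parameters, and the sample timestamps are hypothetical. The sketch counts events per window using the time each event occurred (not when it arrived), keeps open windows as state, and uses a watermark (the highest event time seen minus an allowed lateness) to decide when a window is complete.

```python
from collections import defaultdict


class EventTimeWindowCounter:
    """Counts events per event-time window and emits a window once the watermark passes it."""

    def __init__(self, window_seconds, allowed_lateness):
        self.window_seconds = window_seconds
        self.allowed_lateness = allowed_lateness
        self.open_windows = defaultdict(int)  # state: window_start -> count so far
        self.watermark = float("-inf")
        self.dropped_late = 0

    def process(self, event_time):
        """Handle one event; return the (window_start, count) results that just closed."""
        window_start = (event_time // self.window_seconds) * self.window_seconds
        if window_start + self.window_seconds <= self.watermark:
            # The window this event belongs to was already emitted: too late.
            self.dropped_late += 1
            return []
        self.open_windows[window_start] += 1
        # Advance the watermark based on the highest event time seen so far.
        self.watermark = max(self.watermark, event_time - self.allowed_lateness)
        closed = sorted(
            start for start in self.open_windows
            if start + self.window_seconds <= self.watermark
        )
        return [(start, self.open_windows.pop(start)) for start in closed]


counter = EventTimeWindowCounter(window_seconds=10, allowed_lateness=5)
results = []
# Event times in arrival order; 8 arrives out of order and 3 arrives too late.
for event_time in [1, 4, 12, 8, 17, 3, 26]:
    results.extend(counter.process(event_time))

print(results)               # [(0, 3), (10, 2)]
print(counter.dropped_late)  # 1
```

The out-of-order event at time 8 is still counted in the first window because it arrives before the watermark passes that window, while the event at time 3 arrives after the window has closed and is dropped. Real engines offer more options for late data, such as side outputs or updating previously emitted results.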
