
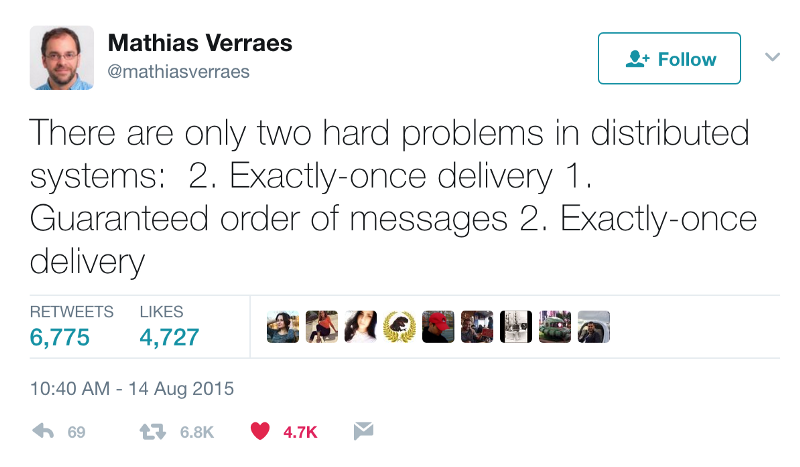

Delivery Guarantees and the Ethics of Teleportation

Distributed systems have to decide which delivery guarantees to support. The options range from no guarantee at all to exactly once, and the option we choose can affect the quality of our data streams. In this post, we will look at various delivery guarantees using teleportation as an example.

The Ship of Theseus is a thought experiment that poses the question: If we replace every part of a ship with new components, is it still the same ship?

It’s a question that comes up often when people discuss the idea of teleportation in science fiction. However, let’s be specific. We’re not talking about the kind of teleportation that punches a hole in space and time, allowing the person to simply step through. Instead, we are discussing the sort of teleportation where the person is dematerialized atom by atom and then rematerialized in a new location.

Following the Ship of Theseus line of reasoning, this raises the question: If we replace every atom of a person with entirely new atoms, have we essentially killed them and replaced them with a clone?

This post isn’t going to try to resolve the Ship of Theseus question. Instead, we want to approach the idea of teleportation using a different but potentially related thought experiment known as the Two Generals Problem.

The Two Generals Problem

The setup for the Two Generals Problem is fairly simple. Two generals are on opposite sides of an enemy. Each is nestled deep in his own valley, and the only way to communicate is by sending messengers through enemy territory. Those messengers may be captured or killed, so the channel between the generals is unreliable.

The two generals are each leading armies that are outnumbered. If either of them attacks the enemy alone, then they are guaranteed to fail. They can only succeed by coordinating their attack. Reliable communication is key to this coordination.

Let’s name our generals Kirk and Picard. Imagine Kirk sends a message to Picard indicating that they should attack at noon. There is no guarantee the messenger will arrive. However, Kirk doesn’t want to attack if he isn’t confident that Picard will be there to support him.

To solve this, Kirk can request an acknowledgment. When Picard receives the message, he will send his own messenger back to Kirk to indicate he agrees with the time. This sounds simple enough, except that the acknowledgment must also cross enemy territory and might get lost. This means Picard won’t know whether Kirk received his acknowledgment, so he can’t be confident that Kirk will attack at the appointed time. The only way he could be sure is to request another acknowledgment, which once again must cross enemy lines.

This puts both Kirk and Picard in a difficult position. Neither can be confident the other has received all of their messages. No matter how many acknowledgments you send, you always need at least one more. In other words, you can’t completely eliminate the risk.

This highlights our basic problem: If you communicate over an unreliable channel, then you can’t guarantee that a message will be delivered, and the sender can’t be certain whether it was.
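The heart of the argument is that the general who sends the last messenger sees exactly the same thing whether or not that messenger gets through. Here is a minimal Python sketch of that indistinguishability argument. It assumes a simplified model of our own (not from the original post) in which the generals alternate messages, each one acknowledging the previous, and the exchange stops at the first lost messenger. The names `sender`, `view`, and `last_sender_is_uncertain` are hypothetical.

```python
def sender(i):
    """Message 0 is Kirk's attack order; replies then alternate Picard, Kirk, ..."""
    return "Kirk" if i % 2 == 0 else "Picard"


def view(general, deliveries):
    """Return the message indices a general has received.

    deliveries[i] is True if messenger i got through. Each message is the
    acknowledgment of the previous one, so nothing is sent after a loss.
    In this model, a general's state is fully determined by what they received.
    """
    seen = []
    for i, arrived in enumerate(deliveries):
        if not arrived:
            break  # the chain of acknowledgments stops here
        if sender(i) != general:
            seen.append(i)
    return seen


def last_sender_is_uncertain(n):
    """Compare a run where all n messengers arrive with one where only the last is lost."""
    all_arrive = [True] * n
    last_lost = [True] * (n - 1) + [False]
    last_sender = sender(n - 1)
    # Identical views mean the last sender cannot tell the two outcomes apart.
    return view(last_sender, all_arrive) == view(last_sender, last_lost)


for n in range(1, 6):
    print(n, sender(n - 1), last_sender_is_uncertain(n))
```

Every line prints `True`: adding one more acknowledgment only moves the uncertainty from one general to the other.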

Is teleportation reliable?

Now, let’s go back to science fiction. The question is whether teleportation in science fiction uses a reliable communication mechanism. Obviously, this depends a lot on the particular work of fiction. But in general, we think we can say the answer is usually no.

We’ve seen examples where ships attempting to use teleportation have to go to extreme lengths to cut through the interference of local radiation. Sometimes, they have to resort to sending a landing party because the signal can’t penetrate a planet’s atmosphere. There are examples across all kinds of fiction where a teleportation malfunction results in people being duplicated or even merged with other objects and creatures.

The truth is that unreliable communication makes good stories. So, although some fiction might propose new science that ensures reliable communication, often that’s not the case.

The question is: What are the consequences of this uncertainty?

At most once delivery

Suppose Picard decides to send his first officer Will down to the surface of an unknown planet. This planet is giving off strange radiation that is interfering with the signal. What should Picard do?

One option is best-effort delivery. The teleportation is initiated and Picard prays that Will arrives safely. The teleporter dematerializes him on one end (Is he dead at this point?) and then attempts to rematerialize him at the destination (Is this still the same Will?).

But remember, the communication channel is unreliable. There’s no guarantee that Will arrived at the destination.

If he didn’t, and we don’t do something about it, then Will is gone, lost inside the teleportation data stream.

So what should Picard do?

The logical answer is for Will to send an acknowledgment that he has arrived safely. If the proper acknowledgment is received, then the data stream can be purged and the teleportation is finished.

Except there’s still that pesky radiation interfering with the acknowledgment. If the acknowledgment doesn’t arrive, then Picard won’t know whether Will is safe or not.

If he assumes Will is safe and purges the data stream, but the teleportation actually failed, then Will is dead.

Now, Picard could assume that Will didn’t make it to the planet and try to rematerialize him back on the ship. That way, if the teleportation did fail, Will won’t die. But on the other hand, if the teleportation succeeded, then we’ll end up with two copies of Will, one on the planet, and one on the ship.

Clearly, neither option is very good.
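Stripped of the ethics, the best-effort strategy is at-most-once delivery: send once, never retry, and discard the sender's copy. The minimal Python sketch below assumes a hypothetical lossy channel that drops each transmission with a fixed probability. `send_at_most_once` and `loss_rate` are illustrative names, not part of any library.

```python
import random


def send_at_most_once(message, destination, loss_rate, rng):
    """Best-effort delivery: one attempt, no acknowledgment, no retry."""
    if rng.random() >= loss_rate:
        destination.append(message)  # the transmission got through
    # Either way, the sender keeps no copy, so a lost message is gone for good.


rng = random.Random(42)  # fixed seed so the simulation is repeatable
outcomes = []
for _ in range(1000):
    planet = []
    send_at_most_once("Will", planet, loss_rate=0.3, rng=rng)
    outcomes.append(len(planet))

print("arrived:", outcomes.count(1), "lost:", outcomes.count(0))
```

Each run ends with either zero or one copy of Will on the planet, never two. That is the whole guarantee: no duplicates, but no promise of delivery either.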

At least once delivery

One possible solution is to keep retrying until the teleport succeeds.

When no acknowledgment is received, the teleport is retried. The data in the stream can be used to essentially restart the operation. This can be repeated until a signal is received to indicate that the teleport was successful.

Theoretically, it’s still possible that it never succeeds, which would leave poor Will stuck in teleporter limbo. In that case, maybe Picard could set a maximum of five attempts, and if all of them fail, Will is rematerialized back on the ship.

But this creates two problems (or far more than that). Remember, unreliable communication goes both ways. We don’t know whether Will failed to teleport, or whether he succeeded and failed to send an acknowledgment. If the teleport failed, there’s no harm in retrying, but if it succeeded, things get more complicated. In that case, retries will either create duplicates of Will or they will fuse copies of him together as they are teleported to the same location multiple times. Either we now have five copies of Will on the planet or one copy that resembles something from a horror movie.

To add to the confusion, if the maximum number of retries is exceeded, then a sixth copy of Will would have been created back on the ship.
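Picard's retry policy can be sketched in Python. To keep the example deterministic, this sketch (our own illustration, built around a hypothetical function `teleport_at_least_once`) takes a scripted list of attempt outcomes instead of real radiation: each entry says whether Will arrived and whether his acknowledgment made it back.

```python
def teleport_at_least_once(attempt_outcomes, max_attempts=5):
    """Retry until an acknowledgment arrives or max_attempts is reached.

    attempt_outcomes yields (arrived, ack_received) pairs, one per attempt;
    it must contain at least as many pairs as attempts actually made.
    Returns (copies_on_planet, copies_on_ship).
    """
    copies_on_planet = 0
    outcomes = iter(attempt_outcomes)
    for _ in range(max_attempts):
        arrived, ack_received = next(outcomes)
        if arrived:
            copies_on_planet += 1  # every successful attempt creates a Will
            if ack_received:
                return copies_on_planet, 0  # confirmed: stop retrying
    # No acknowledgment after max_attempts: rematerialize Will on the ship.
    return copies_on_planet, 1


# Every teleport works, but every acknowledgment is lost to the radiation.
print(teleport_at_least_once([(True, False)] * 5))  # (5, 1)
# The first teleport fails outright; the retry succeeds and is acknowledged.
print(teleport_at_least_once([(False, False), (True, True)]))  # (1, 0)
```

The first scenario is the six-Will outcome: five copies on the planet and one on the ship. Retrying guarantees that at least one Will ends up somewhere, but the sender can never rule out extra copies.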

This is definitely not what Picard and Will were looking for. So what should they do?

Exactly once/Effectively once delivery

What they really want is a guarantee that the teleportation succeeded once and only once. There shouldn’t be any doubt. Unfortunately, the Two Generals Problem shows that this level of certainty is impossible when using an unreliable communication channel.

In that case, the best hope is to apply some deduplication logic. In other words, keep performing the teleportation until it succeeds, accept that this may produce duplicates, and then deal with those duplicates.

The problem here is that dealing with those duplicates is a messy business. Essentially, you have to kill all but one of the copies. If Picard didn’t kill Will simply by teleporting him, he definitely is now.
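In software, the grim part is cheap: "killing" a duplicate just means discarding it. A common way to do that is to give each message a unique ID that stays the same across retries and have the receiver remember which IDs it has already processed. The minimal Python sketch below assumes that approach; `DeduplicatingReceiver` is a hypothetical class, not a library API.

```python
import uuid


class DeduplicatingReceiver:
    """Processes each message ID once and silently drops repeats."""

    def __init__(self):
        self.seen_ids = set()
        self.processed = []

    def receive(self, message_id, payload):
        if message_id in self.seen_ids:
            return False  # duplicate from a retry: discard it
        self.seen_ids.add(message_id)
        self.processed.append(payload)
        return True


planet = DeduplicatingReceiver()
teleport_id = str(uuid.uuid4())  # generated once, reused on every retry
results = [planet.receive(teleport_id, "Will") for _ in range(3)]
print(results)  # [True, False, False]
print(planet.processed)  # ['Will']
```

Only the first delivery is processed. In a real system, the set of seen IDs has to survive crashes and be updated atomically with the processing itself; an in-memory set like this one is lost on restart, which would let duplicates through again.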

Is teleportation ethical?

So is a teleporter ethical? There are three possible outcomes:

Accept that sometimes teleporters fail, resulting in the death of the intended crew member

Accept that teleporters are guaranteed to succeed as long as you are willing to risk creating multiple copies of the crew

Accept that in order to avoid creating multiple copies, you have to be willing to kill any duplicates

Clearly, none of these options is ideal. You are either knowingly killing people or knowingly creating duplicates of them. But of the available options, the most ethical choice might be to accept that sometimes teleportation will fail. Getting on a starship comes with risks, and the crew must be ready to accept that. But maybe the risk could be reduced. For example, maybe Picard shouldn’t try to teleport Will to the planet with the strange radiation. Maybe the teleporter should just abort if it detects any chance of failure. That would make boring TV, but it would have much better results for Will and the rest of the crew.

Conclusion

Teleportation isn’t a problem in modern software development; the technology is a long way off. But developers face the reality of the Two Generals Problem every day. Modern networks are unreliable, and any time a message is sent over them, there is a chance of failure. This leaves developers with the same choices as teleportation: they can accept the risk of losing messages, accept that messages may be duplicated, or find some way to handle the duplicates.
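For a message consumer, which of these choices you end up with often comes down to when you record your progress. Kafka consumers, for example, commit offsets to mark what they have read. The Python sketch below is a simplified in-memory model, not the Kafka client API: a consumer reads a log, records a committed offset, and crashes once while handling offset 1. The function names and the simulated crash are hypothetical.

```python
def consume(log, committed, commit_first, processed, crash_at=None):
    """Process log entries from the committed offset; return the new committed offset.

    If crash_at is set, the consumer "crashes" partway through handling that
    offset: after the commit if commit_first, otherwise after processing.
    """
    for offset in range(committed, len(log)):
        if commit_first:
            committed = offset + 1
            if offset == crash_at:
                return committed  # committed but never processed
            processed.append(log[offset])
        else:
            processed.append(log[offset])
            if offset == crash_at:
                return committed  # processed but never committed
            committed = offset + 1
    return committed


def run(commit_first):
    log = ["a", "b", "c"]
    processed = []
    committed = consume(log, 0, commit_first, processed, crash_at=1)
    consume(log, committed, commit_first, processed)  # restart after the crash
    return processed


print(run(commit_first=True))   # ['a', 'c']
print(run(commit_first=False))  # ['a', 'b', 'b', 'c']
```

Committing before processing loses `"b"` after the restart (at most once); committing after processing replays it (at least once). Neither order gives exactly once on its own. That requires either deduplicating on the receiving side or committing the processing result and the offset atomically, which is broadly what Kafka's transactional guarantees are for in consume-process-produce pipelines.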

In the software world, this is rarely an ethical dilemma. Deduplicating messages won’t usually result in someone dying. But there are cases where this becomes a more complicated decision. Financial transactions could be very dangerous if they were duplicated or lost. Great care must be taken to ensure that doesn’t happen. Similarly, a medical device that is designed to give doses of medication to a patient could cause death if a dose is lost or duplicated, and you can’t undo a dose. In these situations, developers have to be very careful to ensure that messages are being received the right number of times. They may have to make an ethical decision about how to deal with an unexpected failure.

The reality is that working in distributed systems is hard. But at Confluent, we want to try to make it as easy as possible for you to deal with. It’s why we have transactional guarantees in Apache Kafka®. If you want to learn more about how to implement different delivery guarantees using Kafka and .NET, check out our Apache Kafka for .NET Developers course.

