Kafka, Flink, and Beyond! Here’s What Happened at Current 2023

We’re back for another round!

This week, Current | The Next Generation of Kafka Summit kicked off year two of its run as the industry conference for all things Apache Kafka® and real-time data streaming. Hosted in San Jose, the summit welcomed over 2.1k in-person attendees (and 3.5k+ who joined virtually), showcased two major keynotes, and played host to 100+ breakout sessions led by subject matter experts from nearly every major industry. Ready for the instant replay? Let’s dive in.

Day One Keynote: Streaming Into the Future: The Evolution and Impact of Data Streaming Platforms

Jay Kreps, original Kafka co-creator and Confluent CEO, opened the keynote by talking about what inspired him to work on the first version of Kafka at LinkedIn. “A business is fundamentally an activity happening in reality. It’s continuous. It happens all day,” he said. “Yet for us, all the most sophisticated data processing, all the best things we could do with data, were happening in batch.”

Jay went on to describe the mess that resulted from this disconnect and the various tools people used to remedy it, each of which came with noteworthy limitations. Data streaming existed, but it was thought of as a “specialized niche,” he elaborated. Things have since changed: data streaming has become a ubiquitous part of nearly every major organization’s approach to real-time data. Just how ubiquitous? “This is a technology that is going to be a fundamental part of data architecture…for all types of data in every organization, for all the data on earth,” Jay said.

But what about data from space? NASA weighs in

Joe Foster, Cloud Computing Program Manager at NASA, joined Jay on stage to talk about how NASA’s GCN (General Coordinates Network) project uses data streaming to let observatories all over the world publish alerts in real time when they witness any kind of transient phenomenon in the sky. “You can subscribe to real-time alerts and if you’re in the area of observation, you can go outside and witness (the phenomenon), and we can crowdsource these observations. It’s a capability that we didn’t have before,” Joe explained.

The keynote was chock-full of other exciting discussions and demonstrations, including:

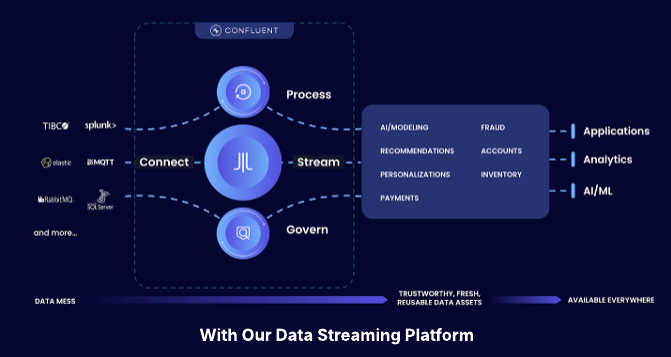

A deep dive on the essential capabilities of a data streaming platform (DSP) and why DSPs are the key for organizations looking to revolutionize their relationship with data

Conversations with Warner Bros. Discovery and Notion about their latest data streaming use cases

Multiple demos, including one for data governance and another for stream processing with Flink

You can watch the full day one keynote here to see it all.

Day Two Keynote: Kafka, Flink, and Beyond

Danica Fine, Staff Developer Advocate at Confluent and program committee chair for Current 2023, opened the day two keynote with a shoutout to the crowd. “Without you—without all of you attending this conference, without all of our talented speakers…, and without the community supporting us—we wouldn’t be here today,” she said. “But equally important to the people that helped make this conference possible are the technologies represented here. Current was founded with Apache Kafka at its core,” Danica said, before acknowledging that Kafka (although very important) is just one piece in the overall data streaming picture.

And while she was quick to highlight the over 30 data streaming technologies covered across 84 breakout sessions during the summit, Danica noted that the main focus of today’s keynote (aside from Apache Kafka) would be data processing’s rising star, Apache Flink®. And with that, the audience was well-prepped for what came next: an action-packed rundown of the latest trends, updates, and future developments in the worlds of Kafka and Flink—with a few analogies about how the pairing is simply meant to be (like PB&J).

What makes Flink stand out?

We heard next from Martijn Visser, Senior Product Manager at Confluent, about the top three reasons he thinks Flink has become the de facto standard for stream processing. Martijn cited:

Flink’s broad set of APIs

Its low-latency, high-throughput stream processing runtime

The robustness of the Flink community

“Flink offers unified batch and stream processing APIs in Java, Python, and SQL. These are layered APIs at different levels of abstractions, meaning you can handle both common and specialized use cases,” Martijn said.

The latest on Kafka

Next up was Ismael Juma, Senior Principal Engineer at Confluent, who shared the latest exciting changes to the Kafka project. Top of mind was KRaft, Kafka’s new metadata layer built on a replicated log. “This has been a multi-year project with contributions from a variety of individuals and companies, and it brings significant benefits: it is simpler to manage (a ZooKeeper cluster is no longer required), it’s more scalable (the maximum number of supported partitions has increased by an order of magnitude) and it’s more resilient (a number of edge cases have been addressed),” Ismael said.

Kafka: What’s on the horizon?

The short answer to “What’s on the horizon for Kafka?” A lot.

We can’t cover everything here, but have included a few highly anticipated developments below. We definitely recommend you watch the recorded keynote to get the full scoop!

Simplified Protocol, Better Clients: It’s critical that the Kafka protocol evolve with compatibility in mind to set it up for success in the next 10 years. To get there, we have to simplify the client protocol. We’re taking a step in the right direction with KIP-848.

Support Participation in Two-Phase Commit: KIP-939 will address challenges around integrating events into apps and keeping your events and the database state in sync.

Docker Images & GraalVM Support: There is a proposal for using GraalVM’s native image for faster start-up, lower memory usage, and smaller images. Check out KIP-974 and KIP-975 for some of the promising results of initial experiments.

Queues for Kafka: This proposal elegantly enriches the Kafka protocol and client APIs to support additional use cases. See KIP-932 to learn more.

And more!

BMW’s massive data streaming win

The day two keynote also showcased some major companies that are taking Kafka to the next level. Tobias Nothaft, Sub Product Owner, Streaming Platform at BMW, joined us to share how his team provides platform and infrastructure services for Kafka to internal users at BMW. One of the noteworthy results?

“R&D is, for example, providing lots of valuable event streams with parts master data or the bill of materials. And sales use a lot of the data to offer the right products and keep up to date with order information for our end customers. This means without Kafka, our customers would never get a car,” Tobias said.

You can watch the recorded day two keynote here.

Welcome to the show!

The showroom floor at the McEnery Convention Center in San Jose was packed with a who’s who of industry-leading technology providers and thought leaders in the data streaming space. At the Confluent booth, we had Kora on full display: the Apache Kafka engine that powers Confluent Cloud as a cloud-native, 10x Kafka service.

We also showcased an exciting demo on how you can take high-quality, trusted data from Confluent Cloud and share it downstream to a Rockset vector database that powers a GenAI application. People who attended the demo learned why we see data streaming as key to the AI and GenAI trend. The reason? You can’t properly train an AI model, or run inference on it, without real-time, high-quality, trusted data that’s delivered across the hundreds of systems, apps, and databases in the enterprise. Stay tuned to this blog for more from us in the AI space…and check out our existing webinar and blog post to learn how to harness ChatGPT to build real-world use cases and integrations in your organization.

There’s always time for fun

Would it really be a tech conference if there weren’t some games? We know the answer to that question. That’s why we spent plenty of time in the Kafka Arcade space trying to get the top score on Ms. Pac-Man.

And when we failed at that, it was time to head to the Flink Forest for some networking and a few rounds of cornhole.

Session and Panel Highlights

There’s no way we could recap all the sessions and panels that took place at Current this year in a single blog post (there were a ton!). You can check back here in a week to watch all the session recordings. But in the meantime, we’ll give you a quick taste of a couple of standouts.

Session: Flink Power Hour

During this jam-packed hour of Flink-centered fun, we got to hear from three speakers on a variety of Flink topics:

Timo Walther, Principal Software Developer I at Confluent, gave us a rundown on what Apache Flink is used for and a demo that showed the various capabilities of Flink Table API, which can be found here

David Anderson, Software Practice Lead at Confluent, presented an overview of Flink SQL joins

David Moravek, Staff Software Engineer II at Confluent, discussed how Flink’s adaptive scheduler has now reached a maturity stage where it can be considered the new default for data streaming

Panel: Women in Technology

Did you know that women make up only 28% of professionals in the tech industry? Thankfully we had some powerful women executives present at Current this year who are committed to breaking down barriers for other women interested in working in tech!

Our Women in Tech panelists were Denise Hemmert, VP of Enterprise Enablement Services at Cardinal Health; Joey Fowler, Senior Director of Technical Services at Denny’s; Mona Chadha, Director of Infrastructure Partnerships at AWS; Sharmey Shah, AVP, Sales-East at Confluent; and Shruti Modi, Director of Data Platform at Penske Transportation Solutions.

Moderated by Stephanie Buscemi, Confluent’s very own CMO, this panel discussion touched on topics like:

Finding strong mentors in the workplace

How to remain authentic and kind while focusing on cognitive diversity

How to mentor other women and help them overcome self-doubt

Identifying safe people and allies

A standout quote from Sharmey Shah: “Physical diversity is important, but prioritize building a team of individuals that think differently. The idea is that the strongest teams bring different ideas to the table…and the other diversity factors will align accordingly.”

That’s a wrap…but we’ll see you soon!

Thank you to everyone who joined us at Current 2023! We hope to see you at our next events in 2024:

Kafka Summit | March 19-20 | ExCeL London | London, UK

Current | September 17-18 | Austin Convention Center | Austin, TX

Stay tuned to this space for when registration goes live!

