How to Better Manage Apache Kafka by Creating Kafka Messages from within Control Center

Managing Apache Kafka® clusters can be tricky. Confluent Control Center helps you manage and monitor your clusters and interact with other Confluent components, such as ksqlDB and connectors. In other words, Control Center is a UI for overseeing existing cluster resources and setting up new ones. If you already have Kafka clusters and surrounding components set up, Control Center is the cherry on top that completes the ecosystem. If you are new to Confluent and Kafka, Control Center is a great entry point for exploring various resources visually.

Confluent Platform 6.2.0 introduces some new features to Control Center that make managing clusters an even smoother experience. Here are a few highlights that we are excited to share with you today:

- Create Kafka messages (with key and value) directly from within Control Center

- Export Kafka messages in JSON or CSV format via Control Center

- Inspect topics more easily by seeing the last time that a message was produced to a topic

- Remove residue data from old Control Center instances with a cleanup script

This blog post is the first in a four-part series that discusses the above features in detail. If you are not too familiar with Control Center, please refer to the Control Center overview first. Having a running Control Center instance at hand helps you explore the features discussed in this blog series better.

Now that you are ready, let’s delve into the first feature: creating Kafka messages from within Control Center. This post covers the following:

- What “creating Kafka messages” is: A description of the feature and its intended audience

- How to produce messages in Control Center: Step-by-step tutorial on how to produce messages

- Important caveat: Mismatching JSON schemas: Learn about an important case to note when producing messages

- Important caveat: Null key and value: Learn about setting null for topics with different cleanup policies

- More ways to produce messages: Learn about other ways to produce messages

What “creating Kafka messages” is

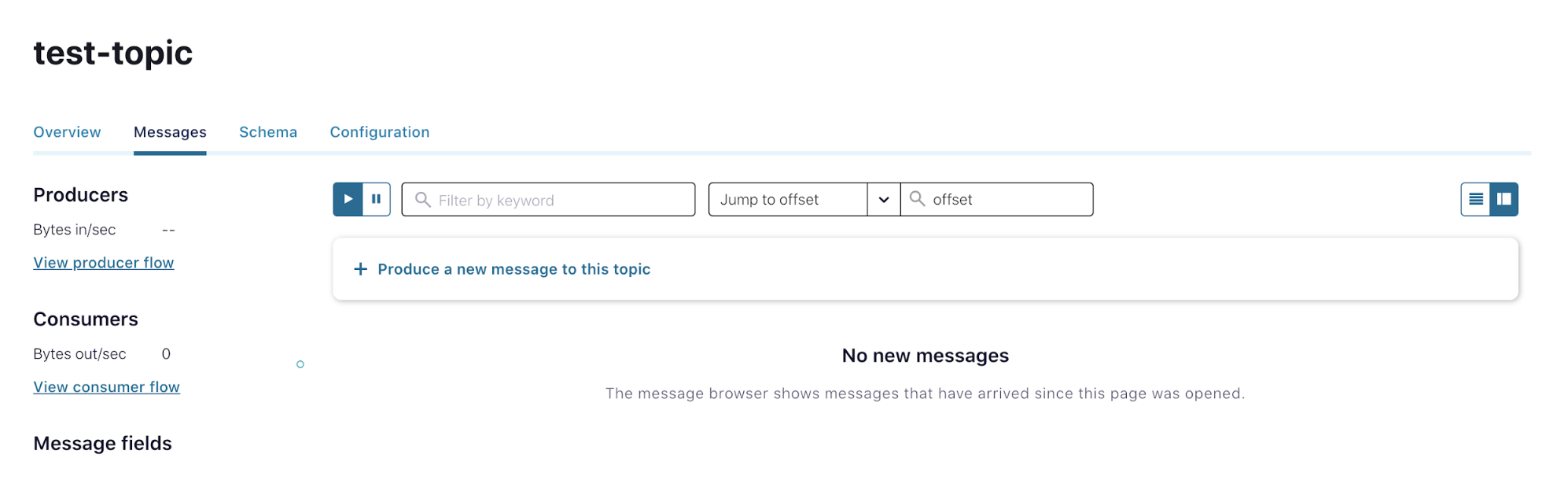

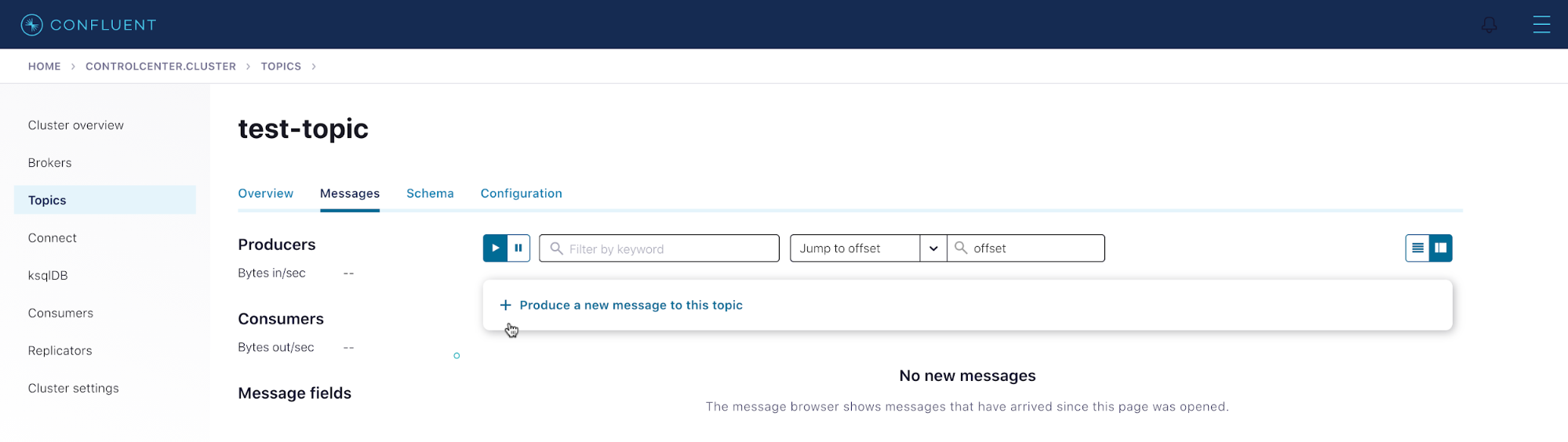

Prior to 6.2.0, only Confluent Cloud provided a single-message producer, which lets you produce messages from the Topic window, as shown below. This is perfect for producing individual messages to a topic from the UI to see the topic “working.” However, that built-in producer only lets you specify the value of a message, not the key. Version 6.2.0 enhances the single-message producer to support both a key and a value, and it also brings this functionality to Confluent Platform, our on-prem solution.

To learn more about the outdated Confluent Cloud value-only, single-message producer, see the documentation.

How to produce messages in Control Center

Follow these steps to produce individual messages from within Control Center:

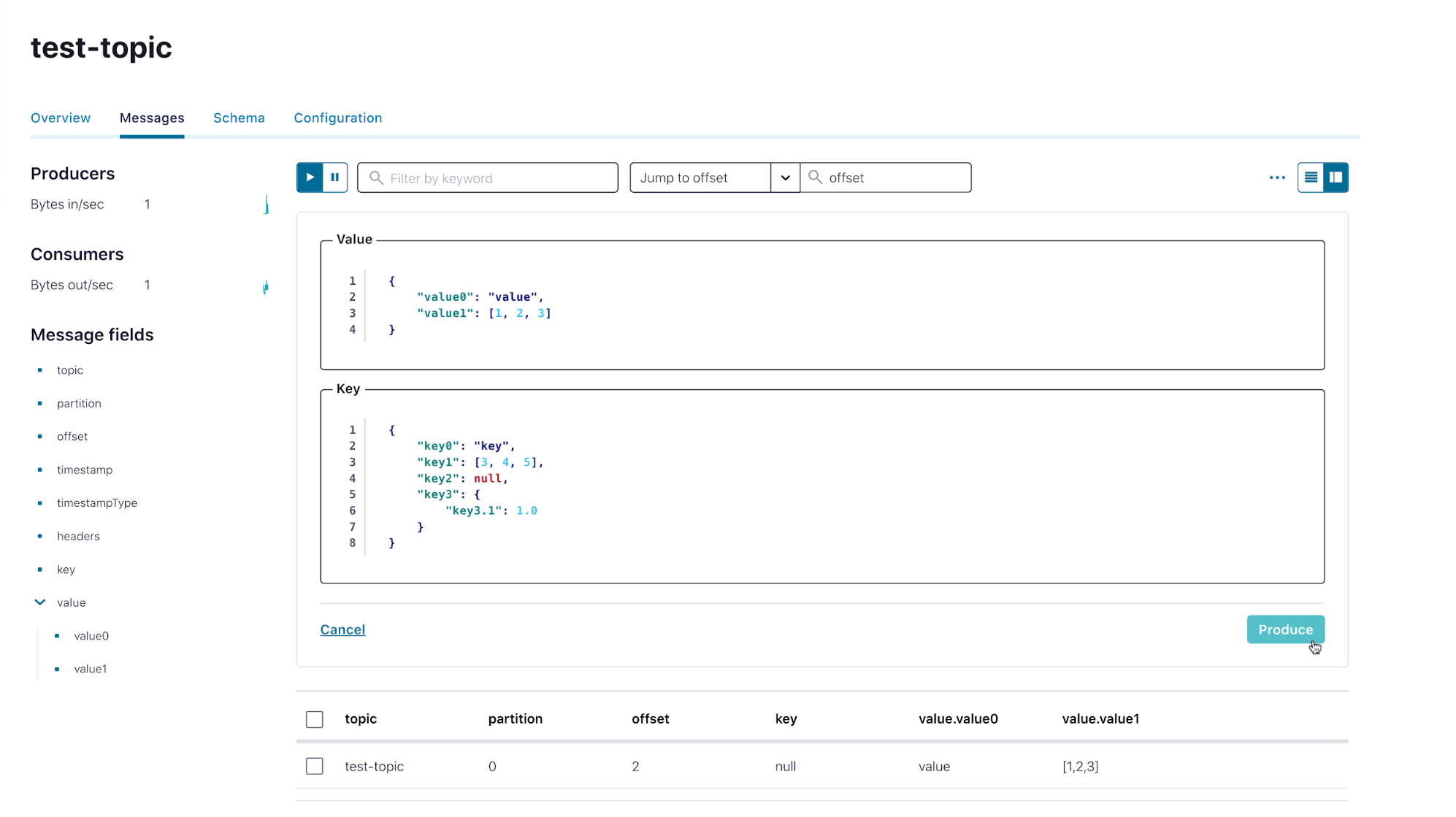

- First, choose a topic and click on the Messages tab. Click on + Produce a new message to this topic to expand the window.

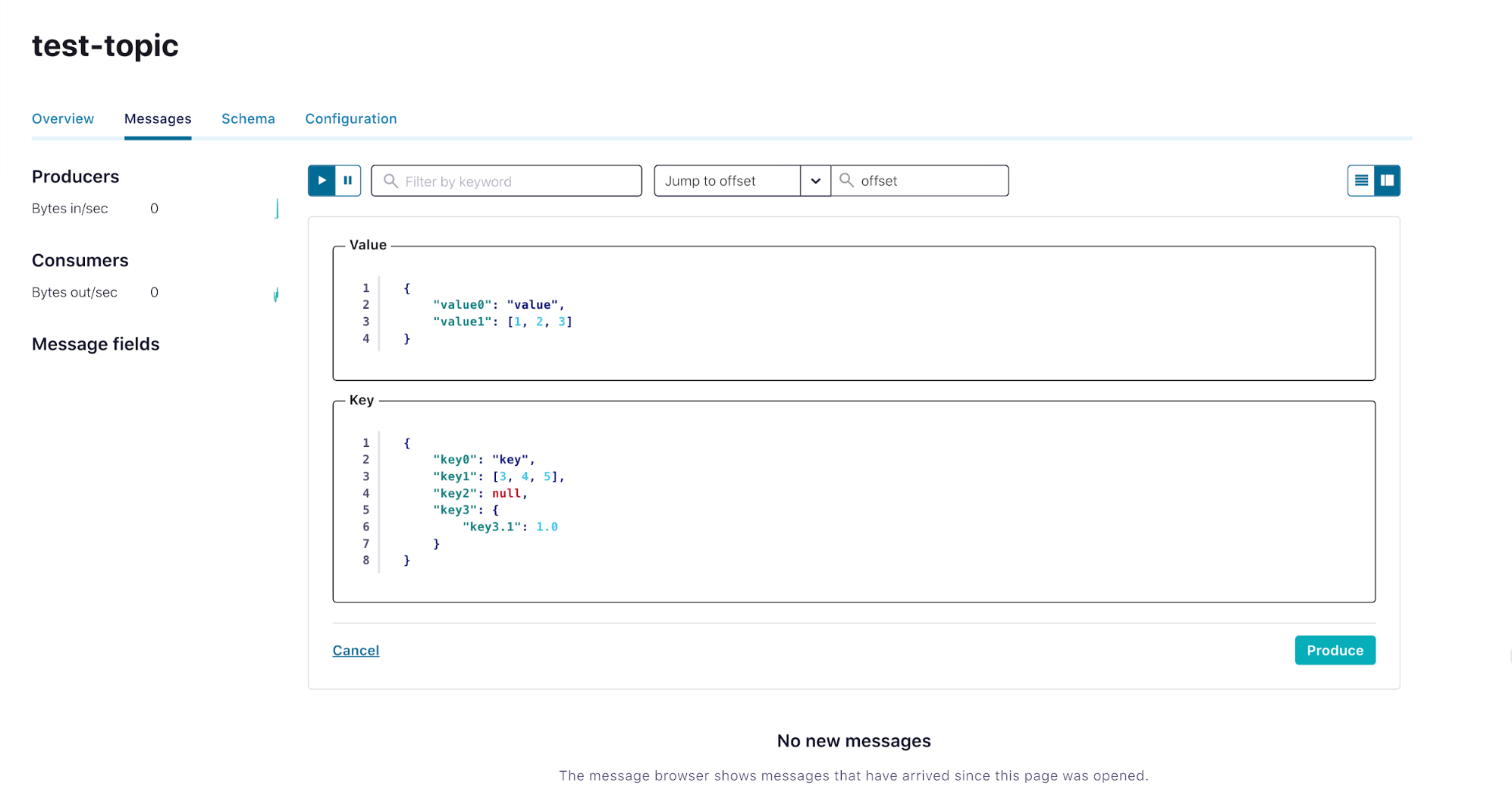

- Enter valid JSON objects for the key and value, and then click on Produce.

- Here’s an example of a message with a more complex key:

- See the message generated below. Note: It can take a few seconds for the message to appear in the UI.
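Because the key and value must both be valid JSON, it can help to check a payload before pasting it into the form. The following is a minimal sketch, not part of Control Center: `check_json_field` is a hypothetical helper, and the sample key and value contents are made up for illustration.

```python
import json


def check_json_field(text):
    """Parse text as JSON; raise ValueError with a readable message if invalid."""
    try:
        return json.loads(text)
    except json.JSONDecodeError as err:
        raise ValueError(f"not valid JSON: {err.msg} at position {err.pos}") from err


# Sample payloads (contents are made up for illustration).
key_text = '{"key0": "a", "key1": 1, "key2": [1, 2], "key3": null}'
value_text = '{"value0": "value", "value1": [1, 2, 3]}'

key = check_json_field(key_text)
value = check_json_field(value_text)
print(sorted(key), sorted(value))

# Typographic quotes, often introduced by copy and paste, are not valid JSON.
try:
    check_json_field('{“value0”: “value”}')
except ValueError as err:
    print("rejected:", err)
```

The last check is worth noting: JSON copied from a web page or a word processor may contain curly quotes, which a JSON parser rejects.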

Important caveat: Mismatching JSON schemas

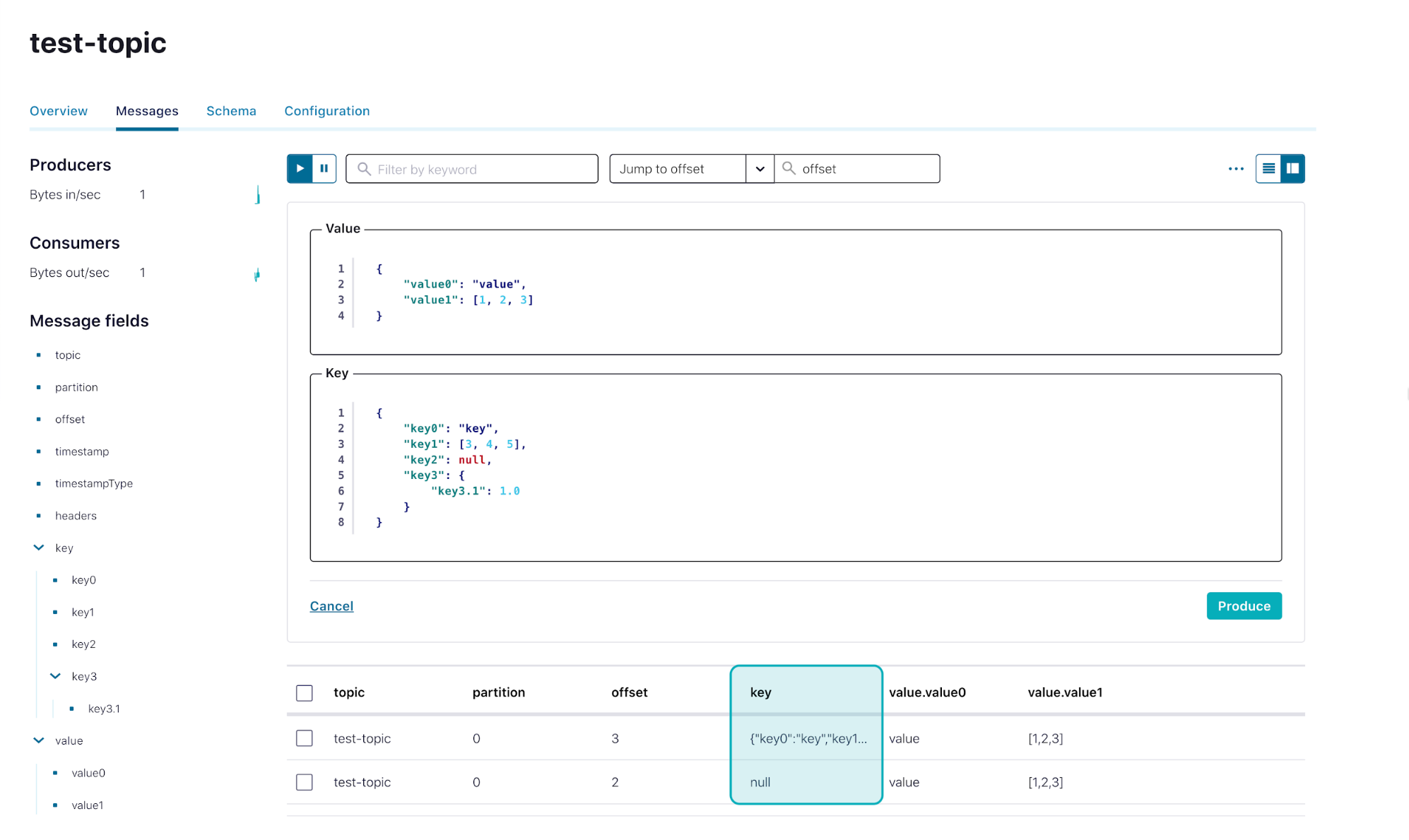

Note that if you send consecutive messages with different JSON schemas, the messages are produced without errors, but the UI does not show the messages’ fields correctly.

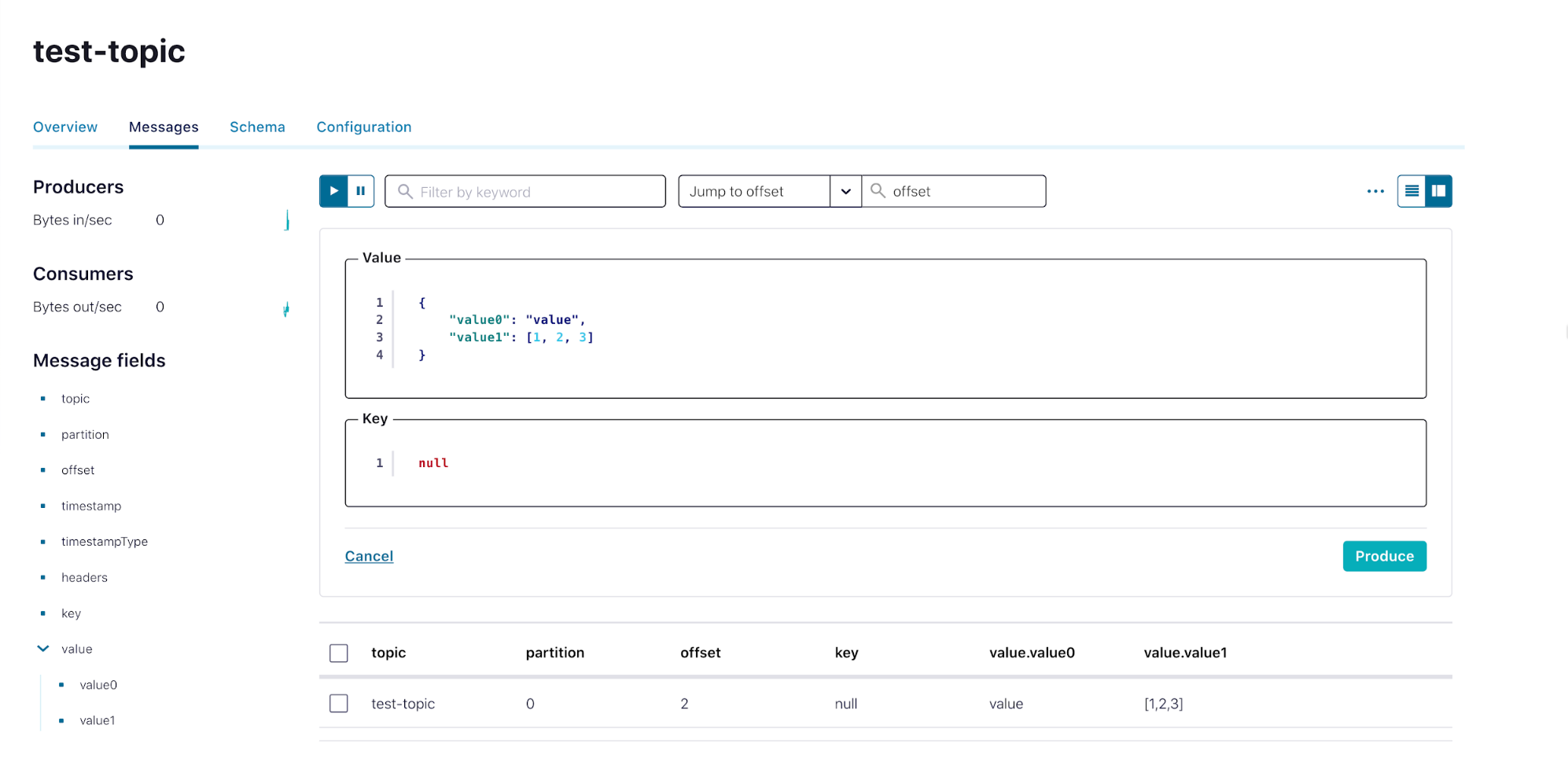

Assume that a message with a null key and the value {"value0": "value", "value1": [1,2,3]} has been produced, and now a second message is about to be produced. The first message had three fields, as shown at the bottom of the image below: key, value.value0, and value.value1. The second message is about to be produced with the same value fields as before, but the key now has key.key0, key.key1, key.key2, and key.key3 fields.

After producing the second message, you should notice that the UI does not accurately display the second message’s key fields, as shown below. This happens because the Control Center UI keeps following the first message’s JSON schema, so the mismatch between the two consecutive messages leaves the new key fields out of place.

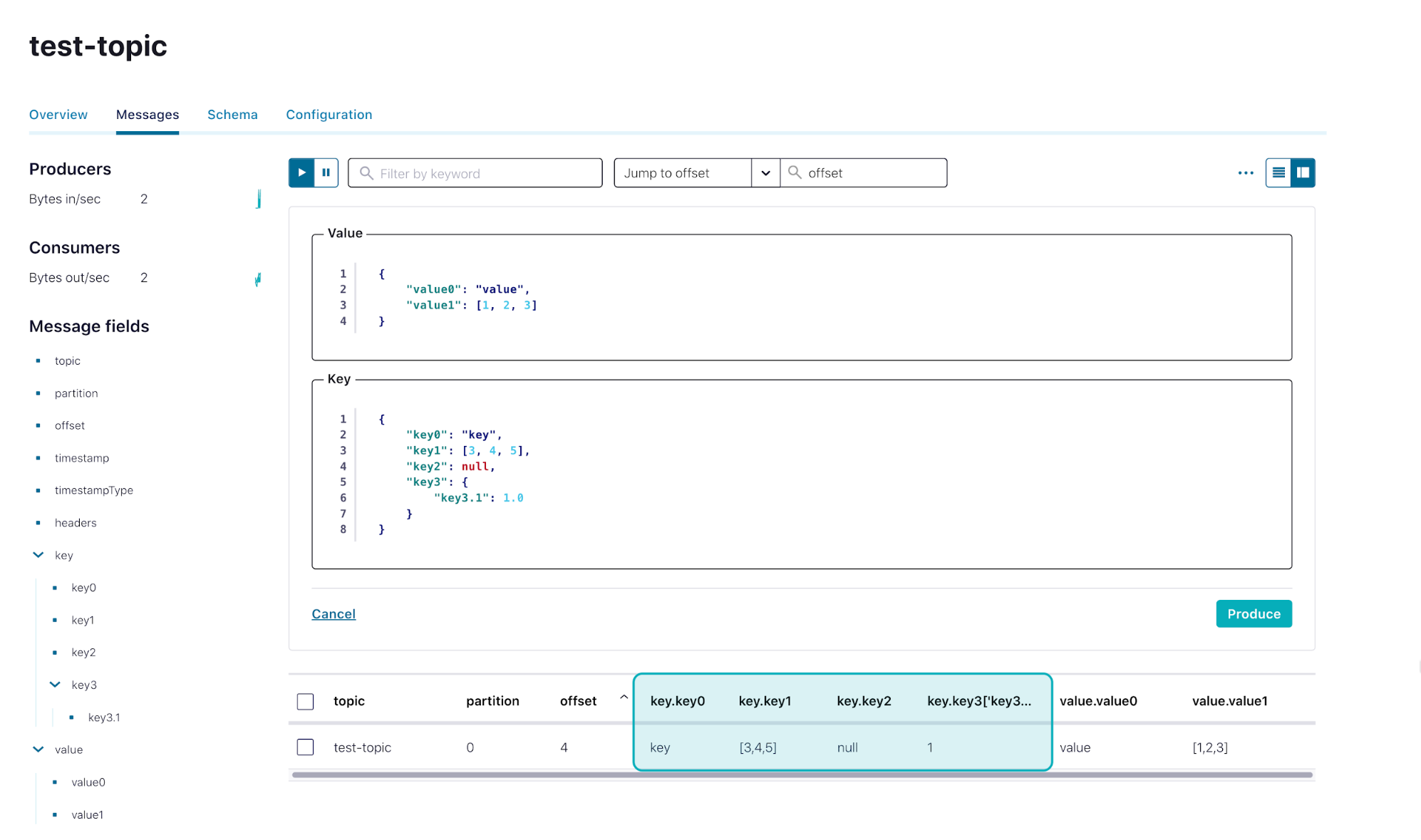

You can fix this by refreshing the page. For example, click on Overview, return to the Messages tab, and try producing the same message again. The fields are now displayed correctly.
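To see why the two messages do not line up, it helps to compare their flattened field names. The sketch below is only an illustration of the dotted naming used above (key, value.value0, key.key0, and so on). It is not how Control Center is implemented, `flatten_fields` and `message_fields` are hypothetical helpers, and the key values are made up.

```python
def flatten_fields(prefix, obj):
    """Return dotted field names for a JSON value, e.g. value.value0.

    Objects are expanded one name per member; anything else (null, numbers,
    strings, arrays) is treated as a single field. Recursing into nested
    objects is an assumption made for this sketch.
    """
    if isinstance(obj, dict):
        names = []
        for name, child in obj.items():
            names.extend(flatten_fields(f"{prefix}.{name}", child))
        return names
    return [prefix]


def message_fields(key, value):
    """Field names for one message: key fields first, then value fields."""
    return flatten_fields("key", key) + flatten_fields("value", value)


value = {"value0": "value", "value1": [1, 2, 3]}
first = message_fields(None, value)
second = message_fields({"key0": 0, "key1": 1, "key2": 2, "key3": 3}, value)

# Fields of the second message that the first message's layout has no place for.
unplaced = [name for name in second if name not in first]
print(first)
print(unplaced)
```

With the first message's layout in place, there is a single key column and nowhere to put key.key0 through key.key3, which matches the display problem described above.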

Important caveat: Null key and value

Depending on your topic’s cleanup policy, the values that you can specify for the key and value fields change:

- If your topic has a deletion cleanup policy (cleanup.policy=delete), then both the key and the value can be null.

- If your topic has a compaction cleanup policy (cleanup.policy=compact), then the key must never be null, but the value can be null. Kafka defines this behavior: a null key in a compacted topic results in an exception. If you provide a null key, Control Center does not produce the record because it is not allowed. If you provide a null value, the generated message acts as a tombstone marker for key deletion, as defined in Kafka:

“(with compaction cleanup policy)…A message with a key and a null payload will be treated as a delete from the log. Such a record is sometimes referred to as a tombstone. This delete marker will cause any prior message with that key to be removed (as would any new message with that key), but delete markers are special in that they will themselves be cleaned out of the log after a period of time to free up space.”
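The rules above can be summarized in a small sketch. This is a simplified model for illustration, not Kafka or Control Center code: `check_record` and `compact` are hypothetical helpers, and `compact` drops tombstones immediately, whereas Kafka keeps delete markers in the log for a period of time before cleaning them out.

```python
def check_record(cleanup_policy, key, value):
    """Classify a record for a topic, or raise if the record is not allowed.

    cleanup_policy is the topic's cleanup.policy value, e.g. "delete",
    "compact", or "compact,delete".
    """
    policies = {p.strip() for p in cleanup_policy.split(",")}
    if "compact" in policies:
        if key is None:
            raise ValueError("compacted topics require a non-null key")
        if value is None:
            return "tombstone"
    return "record"


def compact(log):
    """Simplified compaction: keep the latest value per key and drop keys
    whose latest record is a tombstone (null value)."""
    latest = {}
    for key, value in log:
        latest[key] = value
    return {k: v for k, v in latest.items() if v is not None}


print(check_record("delete", None, None))
print(check_record("compact", "user-1", None))
print(compact([("a", 1), ("b", 2), ("a", None), ("b", 3)]))
```

In this model, the tombstone for key "a" removes its earlier record, while key "b" keeps only its most recent value.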

More ways to produce messages

Beyond producing messages from within Control Center, here are a few other ways to produce data to topics.

Confluent Platform CLI command

confluent local services kafka produce is intended as a command to send customized, simple messages in a single-node local development environment. For more information, please refer to the Confluent CLI command documentation. For the Confluent Cloud CLI counterpart, please refer to the Confluent Cloud CLI command documentation.

    bin/confluent local services kafka produce test-topic --bootstrap-server localhost:9092
    >message1
    >message2

Confluent Platform CLI tool

bin/kafka-console-producer is intended as a tool that sends customized, simple messages to topics through the command line, though it is not suitable for production environments. You can also use its Avro counterpart, bin/kafka-avro-console-producer, to send Avro data in JSON format. For more information, check out Kafka Tutorials and these Confluent Platform CLI tools. Note that this tool is currently only shipped with Confluent Platform, not Confluent Cloud.

    bin/kafka-console-producer --topic test-topic --bootstrap-server localhost:9092
    >message1
    >message2
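The command above sends values only. To send a key as well, the console producer accepts the parse.key and key.separator properties. The sketch below builds the argument list and the input lines; `console_producer_args` and `format_record` are hypothetical helpers, the "|" separator is an arbitrary choice that avoids clashing with the colons in JSON, and the sketch assumes each input line is split at the first occurrence of the separator.

```python
def console_producer_args(topic, bootstrap_server, key_separator="|"):
    """Build an argument list for kafka-console-producer with key parsing on."""
    return [
        "bin/kafka-console-producer",
        "--topic", topic,
        "--bootstrap-server", bootstrap_server,
        "--property", "parse.key=true",
        "--property", f"key.separator={key_separator}",
    ]


def format_record(key, value, key_separator="|"):
    """Format one input line as <key><separator><value>.

    The key must not contain the separator, otherwise the line would be
    split in the wrong place.
    """
    if key_separator in key:
        raise ValueError("key must not contain the key separator")
    return f"{key}{key_separator}{value}"


args = console_producer_args("test-topic", "localhost:9092")
line = format_record('{"key0": "a"}', '{"value0": "value"}')
print(" ".join(args))
print(line)
```

The list form can be passed as-is to `subprocess.run`, with the formatted lines written to standard input. If you type the command in a shell instead, quote the separator (for example, 'key.separator=|') so that the shell does not treat it as a pipe.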

Kafka Connect Datagen Source Connector

The Kafka Connect Datagen Source Connector is intended as a demo source connector to auto generate sample data, though it is not suitable for production environments.

- If you are using Confluent Community features (that is, without Control Center), follow this Quick Start for Apache Kafka to set up your Datagen connector.

- If you are using Confluent commercial features (that is, with Control Center), follow this quick start to set up your Datagen connector.

Summary

In summary, creating Kafka messages from within Control Center allows you to produce simple messages with your desired key and value. Apart from a few caveats to keep in mind, you can now use this easy tool to produce simple messages through the UI and play around with a Kafka topic.

If you haven’t done so yet, sign up for Confluent Cloud or download Confluent Platform today to get started using Control Center.

Other articles in this series

To learn about the other new features of Control Center 6.2.0 (message export, last-produced time for topics, and the cleanup script), check out the remaining blog posts in this series.
