Confluent + Immerok: Cloud Native Kafka Meets Cloud Native Flink

I’m incredibly excited to announce that we’ve signed a definitive agreement to acquire Immerok, a startup offering a fully managed service for Apache Flink. The Immerok team will join Confluent to help us add a fully managed Flink offering to Confluent Cloud. This is a big step for Confluent, and I want to explain our strategy for stream processing, tell you about the Immerok team, and share why we’re so excited about Flink.

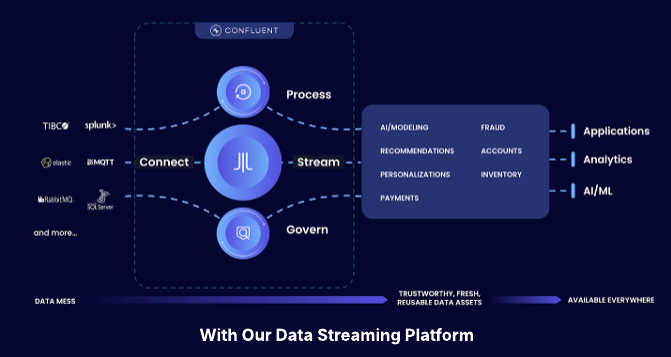

Let me start with why Confluent is focused on stream processing. Our mission is to set data in motion: to make streaming data the new default and to make the data streaming platform a centerpiece of modern data architectures. But to make streaming the default, we need to make it easy. That has two parts: making it operationally easy to get streaming capabilities on demand, and making it as easy and natural to develop applications that use streams as it is to build batch jobs or any other modern application. On the operations side, we’ve tackled this with Confluent Cloud, which lets customers get an incredible data streaming platform centered on Apache Kafka as an elastic, scalable, secure service. On the development side, we need to make building with data streams, that is, stream processing, just as easy.

Confluent has long contributed to the emerging stream processing ecosystem around Kafka with Kafka Streams, KSQL, and some of the underlying transactional capabilities in Kafka that help enable correctness for all streaming technologies.

Why add Flink? We’ve watched the excitement around Flink grow for years and seen it gain adoption among many of our customers. Flink has the best multi-language support, with first-class support for SQL, Java, and Python. It has a principled processing model that generalizes batch and stream processing. It has a fantastic model for state management and fault tolerance. And perhaps most importantly, it has an incredibly smart, innovative community driving it forward. In thinking about our cloud offering and what we wanted to do with stream processing, we realized that a Flink service would give our customers the interfaces and capabilities they want, and could serve as the core of our go-forward stream processing strategy.

In short: we believe that Flink is the future of stream processing. We see this already in the direction of open source adoption, the number of commercial product offerings, and in what we hear from our customers. In the same way that Kafka is the clear de facto standard for reading, writing, and sharing streams across an organization, Flink is on a trajectory to be the clear de facto standard for building applications that process, react, and respond to those streams.

That brings me to why we are so excited about the Immerok team. They have done incredible work helping to build Flink and grow its community, and they’ve used that expertise to build the world’s most advanced Apache Flink service. They are also smart, humble people with big plans, so they’ll fit right in at Confluent.

Of course, we will continue to invest in the native functionality in Kafka Streams and KSQL, and to support integration with external systems like Spark, Materialize, and others. Kafka Streams in particular has proved immensely successful as a basis for simple event-driven apps and has a key place in the Kafka ecosystem. We believe in an open ecosystem around streaming and want to help it flourish.

Our efforts won’t be limited to our product. We think the power of these technologies comes from their communities, and we’ll contribute to Flink just as we have to Kafka. The Flink community is already thriving, and we think we can help it grow further. Our goal, after all, is to make stream processing and event-driven applications the default way of working with data, and that takes a whole community, not just a company.

As for our product plans, we intend to launch the first version of our Flink offering in Confluent Cloud later this year, starting with SQL support and extending over time to the full platform. We want to follow the same key principles we’ve brought to our Kafka offering: building a service that is truly cloud native (not just open source hosted on cloud instances), that is a complete and fully integrated offering, and that is available everywhere across all the major clouds. Beyond this, we think the huge opportunity is to build something 10x simpler, more secure, and more scalable than doing it yourself. We think we can make Kafka and Flink work seamlessly together, like the query and storage layers of a database, so that you don’t have to worry about all the fiddly bits of managing, securing, monitoring, and operating these layers as independent systems. You can just focus on what really matters: building the cool real-time apps that deliver the real value.

We couldn’t be more excited about this direction and the addition of the Immerok team to Confluent. We think the deep expertise and technology they have around Flink, and our ability to integrate that into our service put us in a position to continue to redefine the standard for what a data streaming platform is. I hope you are as excited about this as I am. Stay tuned for what’s to come.
