Confluent

Data Products, Data Contracts, and Change Data Capture

Change data capture is a popular method to connect database tables to data streams, but it comes with drawbacks. The next evolution of the CDC pattern, first-class data products, provides resilient pipelines that support both real-time and batch processing while isolating upstream systems...

Unlock Cost Savings with Freight Clusters–Now in General Availability

Confluent Cloud Freight clusters are now Generally Available on AWS. In this blog, learn how Freight clusters can save you up to 90% at GBps+ scale.

Unleash Real-Time Agentic AI: Introducing Streaming Agents on Confluent Cloud

Build event-driven agents on Apache Flink® with Streaming Agents on Confluent Cloud—fresh context, MCP tool calling, real-time embeddings, and enterprise governance.

Monitoring Your Event Streams: Integrating Confluent with Prometheus and Grafana

Self-managing a highly scalable distributed system with Apache Kafka® at its core is not an easy feat. That’s why operators prefer tooling such as Confluent Control Center for administering and […]

How to Run Confluent on Windows in Minutes

I previously showed how to install and set up Apache Kafka® on Windows in minutes by using the Windows Subsystem for Linux 2 (WSL 2). From there, it’s only a […]

Integrating Azure and Confluent: Real-Time Search Powered by Azure Cache for Redis and Spring Cloud

Self-managing a distributed system like Apache Kafka®, along with building and operating Kafka connectors, is complex and resource intensive. It requires significant Kafka skills and expertise in the development and […]

Integrating Azure and Confluent: Ingesting Data to Azure Cosmos DB through Apache Kafka

Building cross-platform solutions enables organizations to leverage technology driven by real-time data and enabled with both highly available services and low-latency databases hosted on Microsoft Azure. Azure Cosmos DB is […]

Securing the Infrastructure of Confluent with HashiCorp Vault

In order for a technology like Confluent Cloud to make it easy to set data in motion, many different software systems are required to interact with each other using API […]

Simplifying Apache Kafka Multi-Cluster Management Using Control Center and Cluster Registry

Most companies that have adopted event streaming are running multiple Apache Kafka® environments. For example, they may use different Kafka clusters for testing vs. production or for different use cases. […]

How to Manage Secrets for Confluent with Kubernetes and HashiCorp Vault

This blog post walks through an end-to-end demo that uses the Confluent Operator to deploy Confluent Platform to Kubernetes. We will deploy a connector that watches for commits to a […]

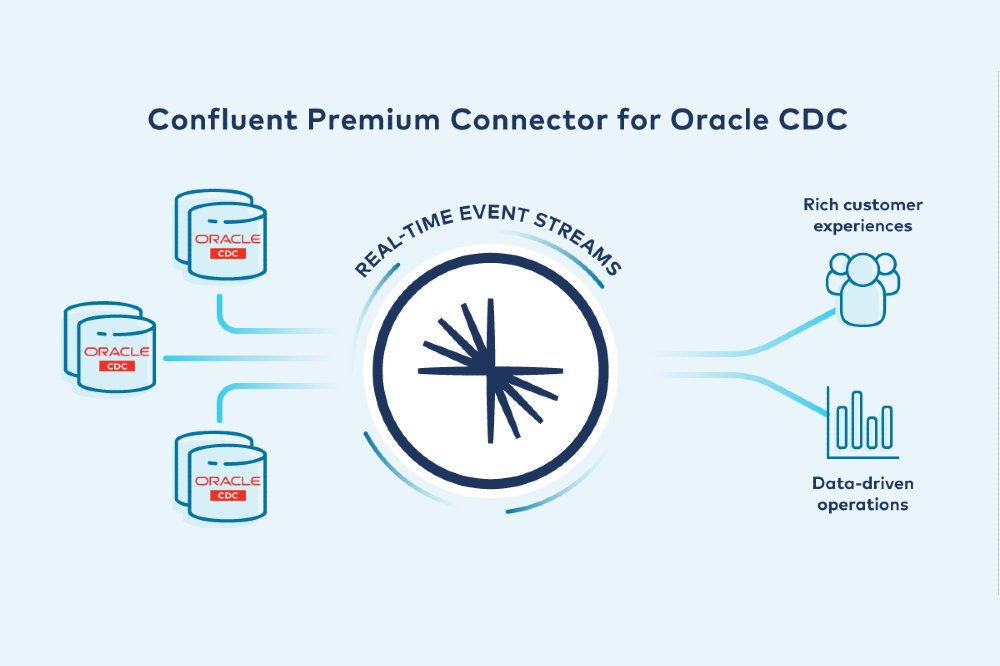

Oracle CDC Source Premium Connector is Now Generally Available

One of the most common relational database systems that connects to Apache Kafka® is Oracle, which often holds highly critical enterprise transaction workloads. While Oracle Database (DB) excels at many […]

Automatic Observer Promotion Brings Fast and Safe Multi-Datacenter Failover with Confluent Platform 6.1

Persisting data in multiple regions has become crucial for modern businesses: They need their mission-critical data to be protected from accidents and disasters. They can achieve this goal by running […]

Introducing Confluent Platform 6.1

We are pleased to announce the release of Confluent Platform 6.1. With this release, we are further simplifying management tasks for Apache Kafka® operators and providing even higher availability for […]

How to Consume Data from Apache Kafka Topics and Schema Registry with Confluent and Azure Databricks

How do you process IoT data, change data capture (CDC) data, or streaming data from sensors, applications, and sources in real time? Apache Kafka® and Apache Spark® are widely adopted […]

Event Streaming Across Networks and Corporate Firewalls Using PubNub and Confluent Platform

This year’s pandemic has forced businesses all around the world to adopt a “remote-first” approach to their operations, with an emphasis on better enabling collaboration, remote work, and productivity. This […]

Property Based Testing Confluent Cloud Storage for Fun and Safety

Confluent uses property-based testing to test various aspects of Confluent Cloud’s Tiered Storage feature. Tiered Storage shifts data from expensive local broker disks to cheaper, scalable object storage, thereby reducing […]

Putting Apache Kafka to REST: Confluent REST Proxy 6.0

Confluent Platform 6.0 was released last year bringing with it many exciting new features to Confluent REST Proxy. Before we dive into what was added, let’s first revisit what REST […]