Apache Kafka

Data Products, Data Contracts, and Change Data Capture

Change data capture is a popular method to connect database tables to data streams, but it comes with drawbacks. The next evolution of the CDC pattern, first-class data products, provides resilient pipelines that support both real-time and batch processing while isolating upstream systems...

Unlock Cost Savings with Freight Clusters–Now in General Availability

Confluent Cloud Freight clusters are now Generally Available on AWS. In this blog, learn how Freight clusters can save you up to 90% at GBps+ scale.

Unleash Real-Time Agentic AI: Introducing Streaming Agents on Confluent Cloud

Build event-driven agents on Apache Flink® with Streaming Agents on Confluent Cloud—fresh context, MCP tool calling, real-time embeddings, and enterprise governance.

Improved Robustness and Usability of Exactly-Once Semantics in Apache Kafka

This blog post covers recent improvements to exactly-once semantics (EOS) that make it simpler to use and more resilient. EOS was first released in Apache Kafka® 0.11 and […]

Data Privacy, Security, and Compliance for Apache Kafka

Why data privacy for Apache Kafka®? As companies seek to leverage all forms of data for competitive advantage, there is a growing regulatory and reputational risk that calls for the […]

Spring for Apache Kafka – Beyond the Basics: Can Your Kafka Consumers Handle a Poison Pill?

You know the fundamentals of Apache Kafka®. You are a Spring Boot developer working with Apache Kafka or Confluent Cloud. You have chosen Spring for Apache Kafka for your integration. […]

The Cost of Apache Kafka: An Engineer's Guide to DIY Kafka Pricing

When I have a small software project that I want to share with the world, I don’t write my own version control system with a web UI. I don’t even […]

My Python/Java/Spring/Go/Whatever Client Won’t Connect to My Apache Kafka Cluster in Docker/AWS/My Brother’s Laptop. Please Help!

When a client wants to send or receive a message from Apache Kafka®, there are two types of connection that must succeed: The initial connection to a broker (the […]

Learning All About Wi-Fi Data with Apache Kafka and Friends

Recently, I’ve been looking at what’s possible with streams of Wi-Fi packet capture (pcap) data. I was prompted after initially setting up my Raspberry Pi to capture pcap data and […]

Apache Kafka Needs No Keeper: Removing the Apache ZooKeeper Dependency

Currently, Apache Kafka® uses Apache ZooKeeper™ to store its metadata. Data such as the location of partitions and the configuration of topics are stored outside of Kafka itself, in a […]

Webify Event Streams Using the Kafka Connect HTTP Sink Connector

The goal of this post is to illustrate PUSH to web from Apache Kafka® with a hands-on example. Our business users always want their data faster so they can […]

What’s New in Apache Kafka 2.5

On behalf of the Apache Kafka® community, it is my pleasure to announce the release of Apache Kafka 2.5.0. The community has created another exciting release. We are making progress […]

How to Make Your Open Source Apache Kafka Connector Available on Confluent Hub

Do you have data you need to get into or out of Apache Kafka®? Kafka connectors are perfect for this. There are many connectors out there, usually for well-known and […]

Kafka Connect Elasticsearch Connector in Action

The Elasticsearch sink connector helps you integrate Apache Kafka® and Elasticsearch with minimum effort. You can take data you’ve stored in Kafka and stream it into Elasticsearch to then be […]

Introducing Confluent Developer

Today, I am pleased to announce the launch of Confluent Developer, the one and only portal for everything you need to get started with Apache Kafka®, Confluent Platform, and Confluent […]

99th Percentile Latency at Scale with Apache Kafka

Fraud detection, payment systems, and stock trading platforms are only a few of many Apache Kafka® use cases that require both fast and predictable delivery of data. For example, detecting […]

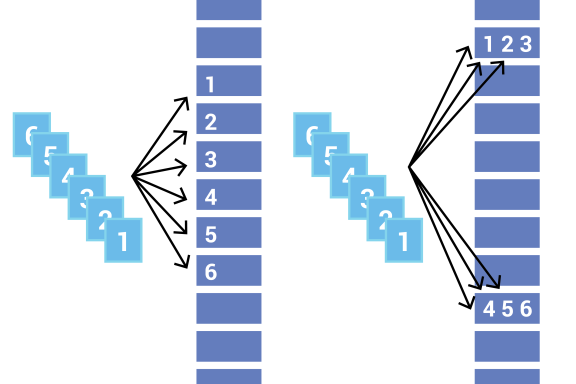

Apache Kafka Producer Improvements with the Sticky Partitioner

The amount of time it takes for a message to move through a system plays a big role in the performance of distributed systems like Apache Kafka®. In Kafka, the […]