Celebrate Back-to-School Season With Data Streaming Basics

Back-to-school season brings the promise of learning new concepts, connecting with peers, and finding inspiration. For those of us in the world of tech, there’s always something new to learn. If you’re yearning for the days of freshly sharpened pencils and a new backpack, try this data streaming syllabus to expand your horizons. You’ll come away with a deeper understanding of the data streaming ecosystem, including:

- Fundamental concepts of Apache Kafka, ksqlDB, and streaming pipelines

- Developing trends in streaming architecture (Hello, data mesh!)

- How managed services are taking data streaming to a whole new level

- Real-life data streaming use cases that are fueling impressive outcomes

- How you can connect with a community of data streaming experts and enthusiasts

Let the learning begin!

What data streaming does, and why it’s important

When you’re getting started with data streaming concepts, get to know Apache Kafka first with Intro to Apache Kafka: How Kafka Works. Kafka is the foundational open-source technology behind data streaming (including Confluent Cloud’s managed service), and in this blog post you’ll find the basics of how Apache Kafka operates and an introduction to its primary concepts, including events, topics, partitions, producers and consumers, and more.
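As a rough illustration of those concepts, here is a toy in-memory model of a topic, its partitions, a producer, and a consumer. It is a sketch in plain Python, not the Kafka client API: the `Topic` and `Consumer` classes and the CRC32-based partitioning are assumptions made for the example, and real Kafka uses its own partitioner and stores logs on brokers.

```python
import zlib


class Topic:
    """Toy stand-in for a Kafka topic: a named set of append-only partition logs."""

    def __init__(self, name, num_partitions=3):
        self.name = name
        self.partitions = [[] for _ in range(num_partitions)]

    def partition_for(self, key):
        # Events with the same key always land in the same partition.
        # CRC32 is an illustrative choice, not the hash Kafka itself uses.
        return zlib.crc32(key.encode("utf-8")) % len(self.partitions)

    def produce(self, key, value):
        # Append the event to the end of its partition and report where it went.
        partition = self.partition_for(key)
        self.partitions[partition].append((key, value))
        offset = len(self.partitions[partition]) - 1
        return partition, offset


class Consumer:
    """Reads each partition in order and remembers how far it has read."""

    def __init__(self, topic):
        self.topic = topic
        self.offsets = [0] * len(topic.partitions)

    def poll(self):
        # Return every event not yet seen, then advance the stored offsets.
        events = []
        for partition, log in enumerate(self.topic.partitions):
            events.extend(log[self.offsets[partition]:])
            self.offsets[partition] = len(log)
        return events


orders = Topic("orders")
orders.produce("customer-1", "order placed")
orders.produce("customer-2", "order placed")
orders.produce("customer-1", "order shipped")

consumer = Consumer(orders)
first_batch = consumer.poll()   # all three events
second_batch = consumer.poll()  # nothing new since the last poll
```

Because both `customer-1` events share a key, they sit in the same partition and are read back in the order they were produced, which is the ordering guarantee the key gives you in this model.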

Next, check out Top 5 Things Every Apache Kafka Developer Should Know to get a sense of how to achieve better Kafka performance and get more details on the architectural concepts. From there, brush up on data system design with Introduction to Streaming Data Pipelines with Apache Kafka and ksqlDB. A data pipeline moves data from one system to another, and streaming pipelines put event streaming into action to bring accurate, relevant data to teams across a business while avoiding batch processing bottlenecks. Confluent’s ksqlDB acts as the processing layer for streaming pipelines and lets you perform operations on data before it goes to its targets.
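To make the shape of a streaming pipeline concrete, the sketch below chains a source, two processing steps, and a sink using Python generators. It only mirrors the idea of a processing layer sitting between source and target. In a real deployment that role would be played by ksqlDB queries over Kafka topics, and the event fields, function names, and the 100.0 threshold here are all hypothetical.

```python
def source(events):
    # Emit events one at a time, as they would arrive on a stream.
    for event in events:
        yield event


def only_large_orders(stream, min_total):
    # Processing step 1: filter out events below a threshold.
    for event in stream:
        if event["total"] >= min_total:
            yield event


def add_region_label(stream):
    # Processing step 2: enrich each event with a field derived from the store name.
    for event in stream:
        yield {**event, "region": event["store"].split("-")[0]}


def sink(stream):
    # Stand-in for the target system: collect whatever reaches the end.
    return list(stream)


raw_orders = [
    {"order_id": 1, "store": "eu-berlin", "total": 20.0},
    {"order_id": 2, "store": "us-austin", "total": 250.0},
    {"order_id": 3, "store": "eu-paris", "total": 120.0},
]

result = sink(add_region_label(only_large_orders(source(raw_orders), min_total=100.0)))
```

Each event flows through every step as soon as it is produced, rather than waiting for a full batch to accumulate, which is the contrast with batch processing drawn above.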

Where data streaming fits into the bigger picture

Once you’ve got the basics down and a mental map of how Kafka works, see how it fits into the larger modern data industry and into your data architecture in particular. Kafka is part of the trend toward decentralized architectures, which can improve productivity and overall performance because teams and systems can work independently. An Introduction to Data Mesh looks at the idea of a central nervous system driven by data streaming, also known as a data mesh. This approach to data and organizational management centers on decentralized data ownership and rests on four principles: data ownership by domain, data as a product, a self-service data platform, and federated governance.

When and how a managed service comes into the picture

There are plenty of ways to get started using Apache Kafka across your organization. Once data streaming reaches mission-critical status, though, a managed service like Confluent Cloud can speed up deployment with features like fully managed connectors, infinite storage, and lots more. See the fundamentals of a managed data streaming service in A Walk to the Cloud, illustrated by friendly otters and a clouded leopard who take the leap from the wild Kafka river to the cloud.

Using data streaming in real life

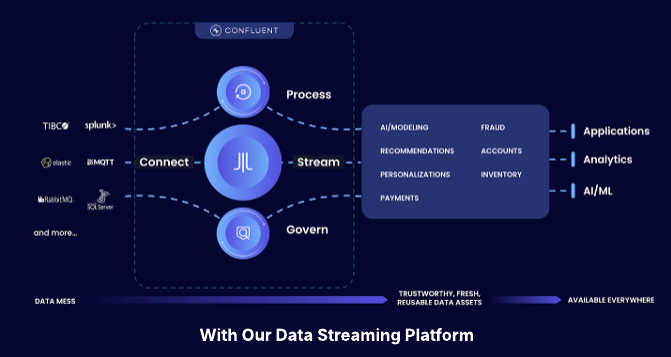

The use cases for event streaming or data streaming are nearly endless for modern businesses, all trying to stay ahead of the competition by anticipating what’s next and doing it cost-efficiently. Check out this Data Streaming 101 infographic to see how one retailer incorporated managed event streaming services so that inventory levels and customer orders stay in sync, and both back-end and front-end systems perform at their best.

Finding your fellow data streaming students

To start putting your knowledge into practice with some test cases, join the data streaming social media community in Succeeding at 100 Days Of Code for Apache Kafka. This project challenges you to take part in your own hands-on learning journey for at least an hour a day for 100 days. Stay connected with your fellow coders on Twitter, LinkedIn, or other preferred channels along the way to share your progress with #100DaysofCode and @apachekafka.

Start the 100 Days challenge at Confluent Developer to build your own self-directed Kafka learning path, and bring in other new resources as you go—whether getting to know microservices, event sourcing, or GraphQL databases or trying programming languages like Python, Go, or .NET.

What else do you want to learn about Kafka and data streaming? If you’re missing being in the classroom, join your fellow data-streaming-curious learners at Current 2022, the next generation of Kafka Summit, in early October. The full agenda is live now.
