
Author: Andrew Sellers

Report: Why IT Leaders Are Prioritizing Data Streaming Platforms

Announcing the 2025 Data Streaming Report, with highlights of its key findings, including the role data streaming platforms play in driving AI success.

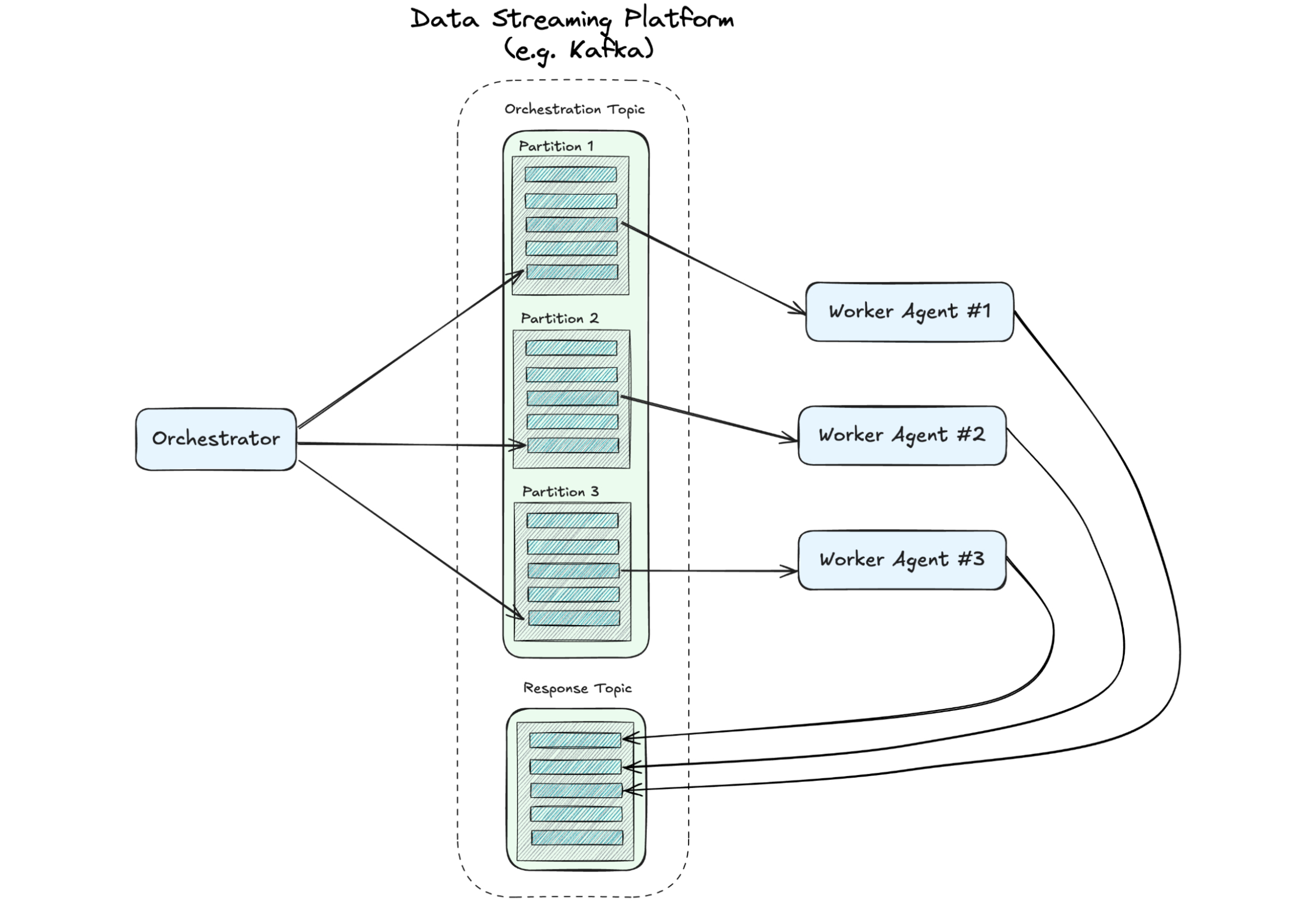

A Distributed State of Mind: Event-Driven Multi-Agent Systems

This article explores how event-driven design, a proven approach in microservices, can bring order to the chaos of coordinating multiple AI agents and produce scalable, efficient multi-agent systems. If you’re leading teams toward the future of AI, understanding these patterns is critical, and we’ll show how they can be implemented.
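As a rough illustration of the pattern (not the article’s own implementation), the sketch below wires two agents together only through topics on an event bus, so neither agent calls the other directly. The in-memory `EventBus` stands in for a broker such as Kafka, and `ResearchAgent`, `WriterAgent`, and the topic names `tasks`, `findings`, and `drafts` are hypothetical placeholders; the agents return canned strings where a real system would call an LLM.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Event:
    topic: str
    payload: dict


class EventBus:
    """In-memory stand-in for a broker (hypothetical): agents publish to and
    subscribe to topics instead of invoking each other."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[Event], None]]] = defaultdict(list)
        self.log: List[Event] = []  # append-only record of every published event

    def subscribe(self, topic: str, handler: Callable[[Event], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, event: Event) -> None:
        self.log.append(event)
        # Deliver synchronously to every subscriber of this topic.
        for handler in list(self._subscribers[event.topic]):
            handler(event)


class ResearchAgent:
    """Hypothetical agent: consumes tasks and emits findings."""

    def __init__(self, bus: EventBus) -> None:
        self.bus = bus
        bus.subscribe("tasks", self.on_task)

    def on_task(self, event: Event) -> None:
        finding = f"notes on {event.payload['question']}"  # placeholder for an LLM call
        self.bus.publish(Event("findings", {"task_id": event.payload["task_id"], "finding": finding}))


class WriterAgent:
    """Hypothetical agent: consumes findings and emits drafts."""

    def __init__(self, bus: EventBus) -> None:
        self.bus = bus
        bus.subscribe("findings", self.on_finding)

    def on_finding(self, event: Event) -> None:
        draft = f"Draft based on: {event.payload['finding']}"
        self.bus.publish(Event("drafts", {"task_id": event.payload["task_id"], "draft": draft}))


bus = EventBus()
ResearchAgent(bus)
WriterAgent(bus)
drafts: List[str] = []
bus.subscribe("drafts", lambda e: drafts.append(e.payload["draft"]))
bus.publish(Event("tasks", {"task_id": 1, "question": "event-driven agents"}))
print(drafts)  # ['Draft based on: notes on event-driven agents']
```

Because each agent depends only on topic names, a new agent can be added by subscribing to an existing topic, and the event log records every step of the workflow for replay or auditing.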

Three AI Trends Developers Need to Know in 2025

Continuing issues with hallucinations, the increasing autonomy of agentic AI systems, and the growing use of dynamic data sources are three AI trends you may want to monitor in 2025.

How Developers Can Use Generative AI to Improve Data Quality

While generative AI is driving the need for stronger data governance, it can also help to meet that need.

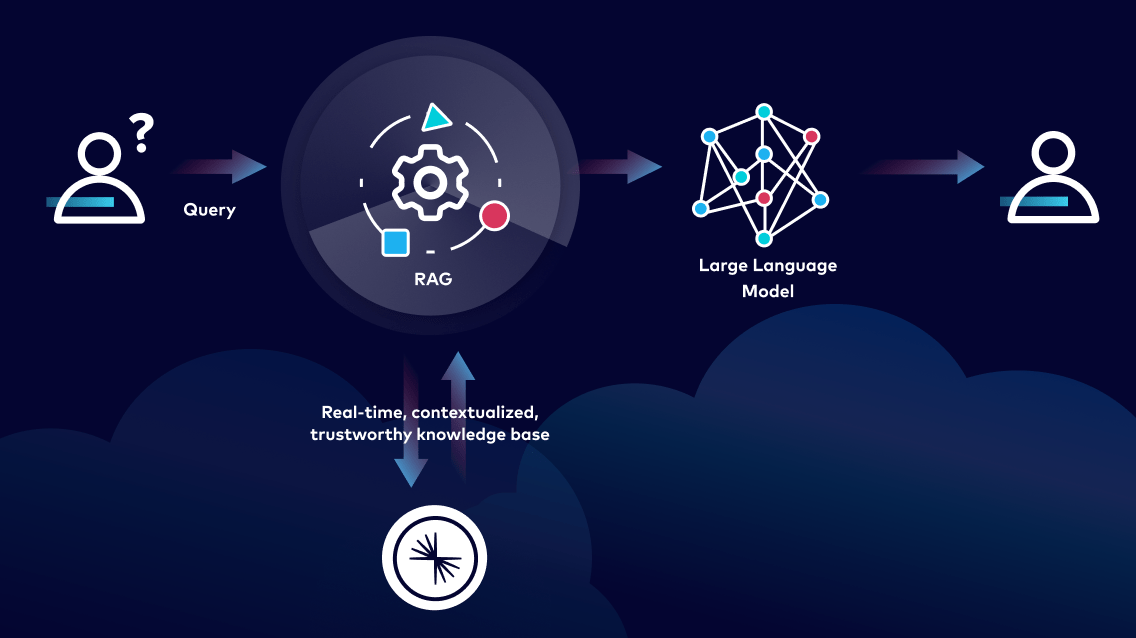

How to Scale RAG and Build More Accurate LLMs

RAG must deliver accurate, up-to-date information, and it must be governed so that it can scale across apps and teams.

2024 Data Streaming Report: Powering AI, Data Product Adoption, and More

In the past, technology served as a supporting function for business. Over time, it has become the business itself. A similar shift is happening with data streaming: it is now a critical foundation of modern business, and this year is an inflection point for data streaming platforms.

4 Steps for Building Event-Driven GenAI Applications

I’ve worked with artificial intelligence for nearly 20 years, applying technologies spanning predictive modeling, knowledge engineering, and symbolic reasoning. AI’s tremendous potential has always felt evident, but its widespread application always seemed to be just a few more years away.

Enterprise Apache Kafka Cluster Strategies: Insights and Best Practices

As companies increase their use of real-time data, we have seen the proliferation of Kafka clusters within many enterprises. Often, siloed application and infrastructure teams set up and manage new clusters to solve new use cases as they arise. In many large, complex enterprises, this organic growth…

Your AI Data Problems Just Got Easier with Data Streaming for AI

While the promise of AI has been around for years, it has seen a resurgence thanks to breakthroughs in reusable large language models (LLMs), more accessible machine learning models, more data than ever, and more powerful GPUs. This has prompted organizations to accelerate their AI…

Join the Excitement at Current 2023: Unmissable Keynotes and 5 Must-Attend Sessions

Today, the use of data streaming technologies has become table stakes for businesses. But with data streaming technologies, patterns, and best practices continuing to mature, it’s imperative for businesses to stay on top of what’s new and next in the world of data streaming.

Real-Time AI: Live Recommendations Using Confluent and Rockset

Real-time AI is the future, and AI/ML models have demonstrated incredible potential for predicting and generating media in various business domains. For the best results, these models must be informed by relevant data.

New Report: The ROI of Data Streaming and Its Biggest Challenges

This year, we crossed an important threshold: data streaming is now considered a business requirement for organizations across many industries. Findings from the 2023 Data Streaming Report show that 72% of the 2,250 IT leaders surveyed are using data streaming to power mission-critical systems.

Real-Time or Real Value? Assessing the Benefits of Event Streaming

Experienced technology leaders know that adopting a new technology can be risky. Often, we are unable to distinguish between investments that will be transformational and those that won’t be worthwhile. This post examines how to decide whether event streaming makes sense for your organization.

Succeeding with Change Data Capture

Change data capture (CDC) converts all the changes that occur inside your database into events and publishes them to an event stream. You can then use these events to power analytics, drive operational use cases, hydrate databases, and more. The pattern is enjoying wider adoption than ever before.
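To make the idea concrete, here is a minimal sketch of turning table changes into events and replaying them to hydrate a downstream copy. It deliberately swaps in a naive snapshot diff for capture; production CDC is usually log-based (it reads the database’s transaction log) and also sees intermediate changes that a diff misses. The event shape, with `op` codes `c`/`u`/`d` and `before`/`after` row images, is loosely modeled on Debezium’s envelope, and the function names `capture_changes` and `apply_changes` are hypothetical. A plain Python list stands in for the event stream (for example, a Kafka topic).

```python
from typing import Dict, List, Optional, TypedDict

Row = Dict[str, object]


class ChangeEvent(TypedDict):
    op: str                # "c" = create, "u" = update, "d" = delete
    key: int               # primary key of the affected row
    before: Optional[Row]  # row image before the change (None for creates)
    after: Optional[Row]   # row image after the change (None for deletes)


def capture_changes(old: Dict[int, Row], new: Dict[int, Row]) -> List[ChangeEvent]:
    """Hypothetical snapshot-diff capture: emit one event per changed row.
    Log-based CDC would read the transaction log instead."""
    events: List[ChangeEvent] = []
    for key in sorted(old.keys() | new.keys()):
        before, after = old.get(key), new.get(key)
        if before is None:
            events.append({"op": "c", "key": key, "before": None, "after": after})
        elif after is None:
            events.append({"op": "d", "key": key, "before": before, "after": None})
        elif before != after:
            events.append({"op": "u", "key": key, "before": before, "after": after})
    return events


def apply_changes(replica: Dict[int, Row], events: List[ChangeEvent]) -> None:
    """Hydrate a downstream copy by replaying events in order."""
    for event in events:
        if event["op"] == "d":
            replica.pop(event["key"], None)
        else:
            assert event["after"] is not None  # creates and updates carry the new row
            replica[event["key"]] = dict(event["after"])


old = {1: {"name": "Ada", "plan": "free"}, 2: {"name": "Bo", "plan": "pro"}}
new = {1: {"name": "Ada", "plan": "pro"}, 3: {"name": "Cy", "plan": "free"}}
events = capture_changes(old, new)
print([(e["op"], e["key"]) for e in events])  # [('u', 1), ('d', 2), ('c', 3)]

replica = {k: dict(v) for k, v in old.items()}
apply_changes(replica, events)
```

After replay, `replica` matches the source table’s new state. The same event list could equally feed an analytics job or an operational service, which is what makes publishing changes as events more flexible than point-to-point database syncs.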