Technology

Data Products, Data Contracts, and Change Data Capture

Change data capture is a popular method to connect database tables to data streams, but it comes with drawbacks. The next evolution of the CDC pattern, first-class data products, provides resilient pipelines that support both real-time and batch processing while isolating upstream systems...

Unlock Cost Savings with Freight Clusters–Now in General Availability

Confluent Cloud Freight clusters are now Generally Available on AWS. In this blog, learn how Freight clusters can save you up to 90% at GBps+ scale.

Unleash Real-Time Agentic AI: Introducing Streaming Agents on Confluent Cloud

Build event-driven agents on Apache Flink® with Streaming Agents on Confluent Cloud—fresh context, MCP tool calling, real-time embeddings, and enterprise governance.

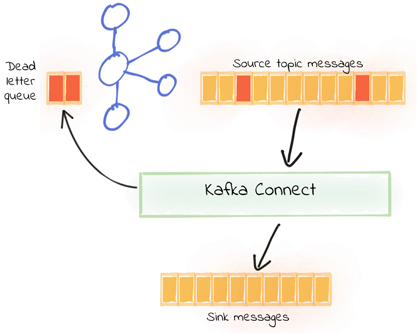

Kafka Connect Deep Dive – Error Handling and Dead Letter Queues

Kafka Connect is part of Apache Kafka® and is a powerful framework for building streaming pipelines between Kafka and other technologies. It can be used for streaming data into Kafka […]

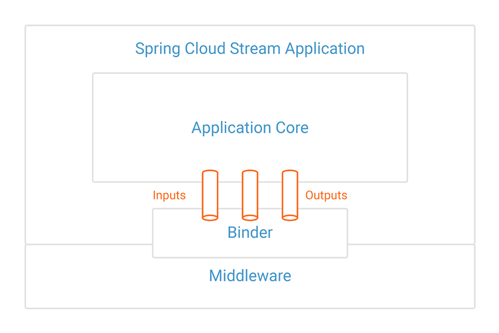

Spring for Apache Kafka Deep Dive – Part 2: Apache Kafka and Spring Cloud Stream

On the heels of part 1 in this blog series, Spring for Apache Kafka – Part 1: Error Handling, Message Conversion and Transaction Support, here in part 2 we’ll focus […]

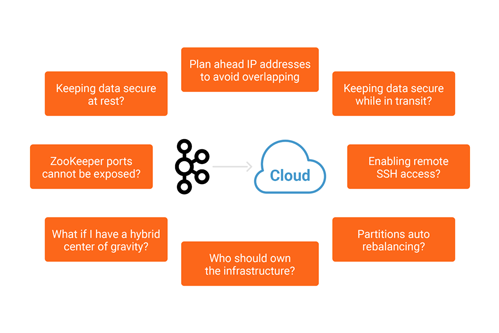

CloudBank’s Journey from Mainframe to Streaming with Confluent Cloud

Cloud is one of the key drivers for innovation. Innovative companies experiment with data to come up with something useful. It usually starts with the opening of a firehose that […]

All About the Kafka Connect Neo4j Sink Plugin

Only a little more than one month after the first release, we are happy to announce another milestone for our Kafka integration. Today, you can grab the Kafka Connect Neo4j […]

Journey to Event Driven – Part 3: The Affinity Between Events, Streams and Serverless

Serverless is all the rage, and it brings with it a tidal change of innovation. Given that it is at a relatively early stage, developers are still trying to grok […]

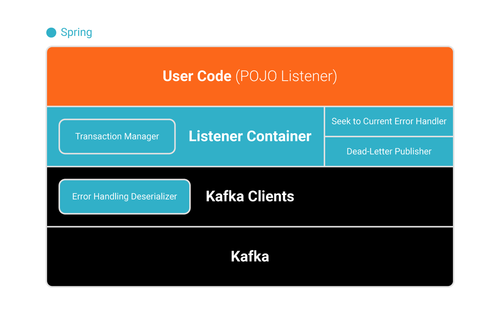

Spring for Apache Kafka Deep Dive – Part 1: Error Handling, Message Conversion and Transaction Support

Following on from How to Work with Apache Kafka in Your Spring Boot Application, which shows how to get started with Spring Boot and Apache Kafka®, here we’ll dig a […]

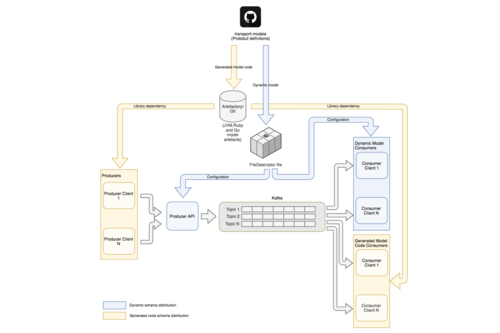

Improving Stream Data Quality with Protobuf Schema Validation

The requirements for fast and reliable data pipelines are growing quickly at Deliveroo as the business continues to grow and innovate. We have delivered an event streaming platform which gives […]

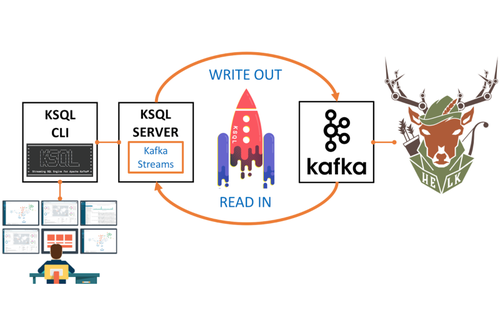

Sysmon Security Event Processing in Real Time with KSQL and HELK

During a recent talk titled Hunters ATT&CKing with the Right Data, which I presented with my brother Jose Luis Rodriguez at ATT&CKcon, we talked about the importance of documenting and […]

Journey to Event Driven – Part 2: Programming Models for the Event-Driven Architecture

Part 1 of our series on event-driven architecture discussed why you need to embrace event-first thinking, while this article builds a rationale for different styles of event-driven architectures and compares […]

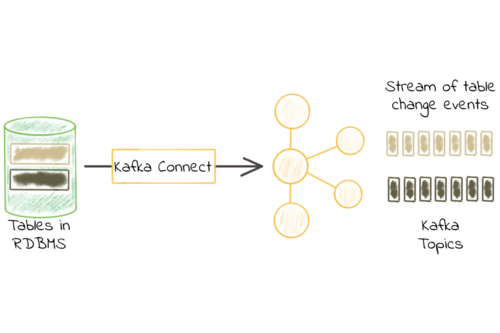

Kafka Connect Deep Dive – JDBC Source Connector

One of the most common integrations that people want to do with Apache Kafka® is getting data in from a database. That is because relational databases are a rich source […]

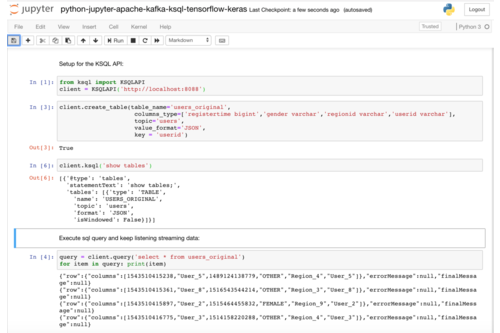

Machine Learning with Python, Jupyter, KSQL and TensorFlow

Building a scalable, reliable and performant machine learning (ML) infrastructure is not easy. It takes much more effort than just building an analytic model with Python and your favorite machine […]

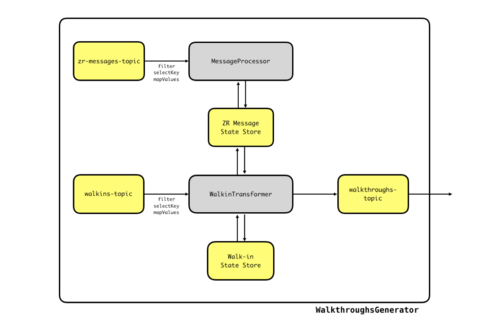

A Beginner’s Perspective on Kafka Streams: Building Real-Time Walkthrough Detection

Here at Zenreach, we create products to enable brick-and-mortar merchants to better understand, engage and serve their customers. Many of these products rely on our capability to quickly and reliably […]

Journey to Event Driven – Part 1: Why Event-First Programming Changes Everything

The world is changing. New problems need to be solved. Companies now run businesses that span the globe and hop between clouds in real time, breaking down data silos to […]

Using Apache Kafka Command Line Tools with Confluent Cloud

If you have been using Apache Kafka® for a while, it is likely that you have developed a degree of confidence in the command line tools that come with it. […]