Technology

Data Products, Data Contracts, and Change Data Capture

Change data capture is a popular method to connect database tables to data streams, but it comes with drawbacks. The next evolution of the CDC pattern, first-class data products, provides resilient pipelines that support both real-time and batch processing while isolating upstream systems...

Unlock Cost Savings with Freight Clusters–Now in General Availability

Confluent Cloud Freight clusters are now Generally Available on AWS. In this blog, learn how Freight clusters can save you up to 90% at GBps+ scale.

Unleash Real-Time Agentic AI: Introducing Streaming Agents on Confluent Cloud

Build event-driven agents on Apache Flink® with Streaming Agents on Confluent Cloud—fresh context, MCP tool calling, real-time embeddings, and enterprise governance.

Leveraging Confluent Cloud Schema Registry with AWS Lambda Event Source Mapping

AWS Lambda's Kafka Event Source Mapping now supports Confluent Schema Registry. This update simplifies building event-driven applications by eliminating the need for custom code to deserialize Avro/Protobuf data. The integration makes it easier and more efficient to leverage Confluent Cloud.

Cross-Cloud Data Replication Over Private Networks With Confluent

Confluent’s Cluster Linking enables fully managed, offset-preserving Kafka replication across clouds. It supports public and private networking, enabling use cases like disaster recovery, data sharing, and analytics across AWS, Azure, Google Cloud, and on-premises clusters.

Monitor Kafka Streams Health Metrics in Confluent Cloud

Confluent Cloud now offers native Kafka Streams health monitoring to simplify troubleshooting. The new UI provides at-a-glance application state, performance ratios to pinpoint bottlenecks (code vs. cluster), and state store metrics.

Beyond Compliance: Confluent's Commitment to Trust and Transparency

Confluent is providing customers and prospects with a full package to build trust and innovate securely: technical documentation, foundational principles, and a new level of transparency.

No More Swamps: Building a Better-Governed Data Lake Architecture

Powering analytics and AI requires reliable, consistent, and easily discoverable data to reach the data lake. To meet these needs, strong and holistic governance is an important part of building better platforms for getting from raw data to valuable insights and actions.

Scaling Kafka Streams Applications: Strategies for High-Volume Traffic

Learn how to scale Kafka Streams applications to handle massive throughput with partitioning, scaling strategies, tuning, and monitoring.

Cross-Data-Center Apache Kafka® Replication: Decision Framework & Readiness Playbook

Learn how to choose the right Apache Kafka® multi-cluster replication pattern and run an audit-ready disaster recovery and high availability program with lag SLOs, drills, and drift control.

How to Transform Data and Manage Schemas in Kafka Connect Pipelines

Learn how to handle data transformation, schema evolution, and security in Kafka Connect with best practices for consistency, enrichment, and format conversions.

Best Practices for Validating Apache Kafka® Disaster Recovery and High Availability

Learn best practices for validating your Apache Kafka® disaster recovery and high availability strategies, using techniques like chaos testing, monitoring, and documented recovery playbooks.

How to Scale and Secure Kafka Connect in Production Environments

Learn best practices for running Kafka Connect in production—covering scaling, security, error handling, and monitoring to build resilient data integration pipelines.

The BI Lag Problem and How Event-Driven Workflows Solve It

Learn how to automate BI with real-time streaming. Explore event-driven workflows that deliver instant insights and close the gap between data and action.

How Does Real-Time Streaming Prevent Fraud in Banking and Payments?

Discover how banks and payment providers use Apache Kafka® streaming to detect and block fraud in real time. Learn patterns for anomaly detection, risk mitigation, and trusted automation.

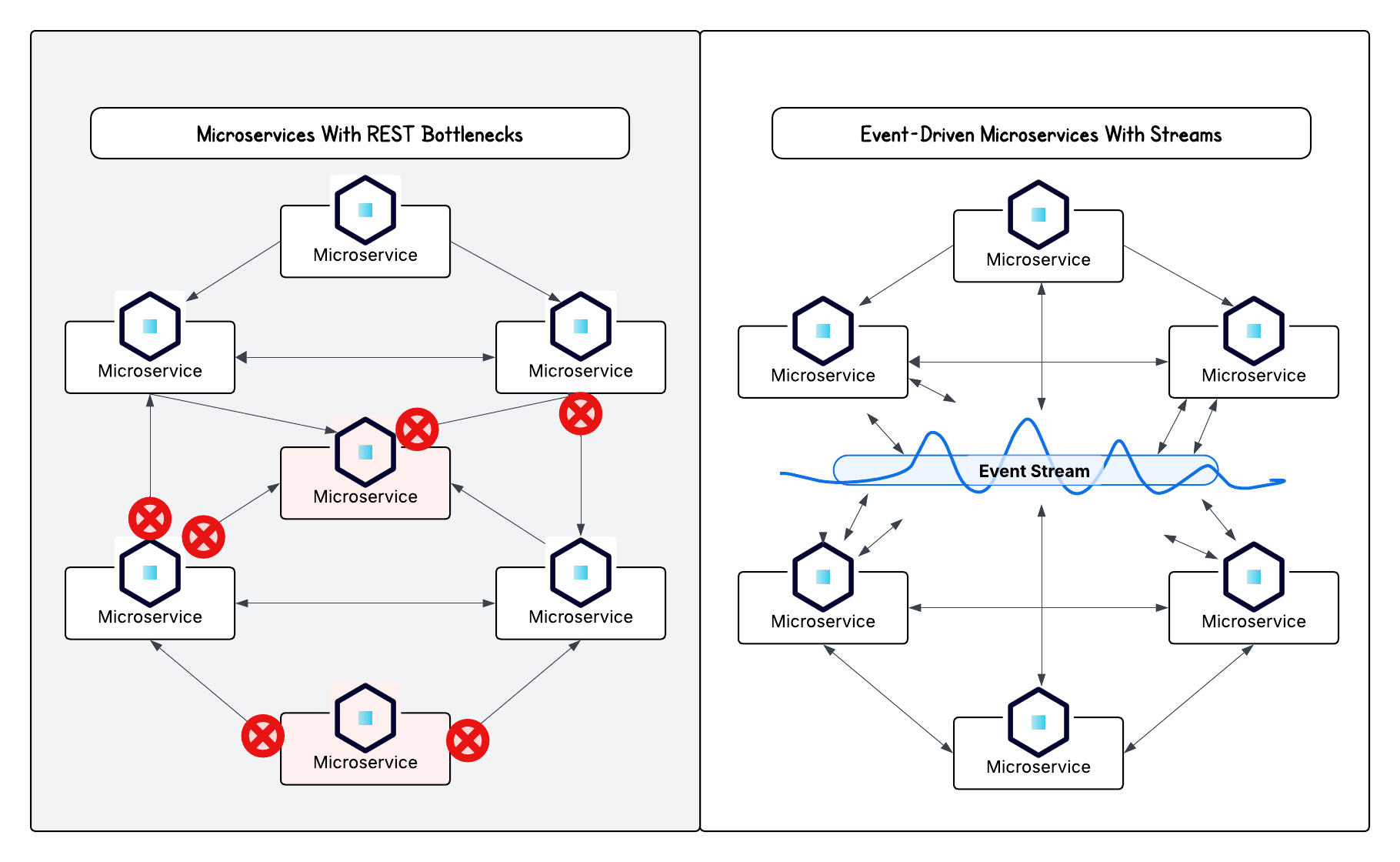

Do Microservices Need Event-Driven Architectures?

Discover why microservices architectures thrive with event-driven design and how streaming powers applications that are agile, resilient, and responsive in real time.

Making Data Quality Scalable With Real-Time Streaming Architectures

Learn how to validate and monitor data quality in real time with Apache Kafka® and Confluent. Prevent bad data from entering pipelines, improve trust in analytics, and power reliable business decisions.