
Confluent Blog

Data Products, Data Contracts, and Change Data Capture

Change data capture is a popular method to connect database tables to data streams, but it comes with drawbacks. The next evolution of the CDC pattern, first-class data products, provides resilient pipelines that support both real-time and batch processing while isolating upstream systems...

Unlock Cost Savings with Freight Clusters–Now in General Availability

Confluent Cloud Freight clusters are now Generally Available on AWS. In this blog, learn how Freight clusters can save you up to 90% at GBps+ scale.

Unleash Real-Time Agentic AI: Introducing Streaming Agents on Confluent Cloud

Build event-driven agents on Apache Flink® with Streaming Agents on Confluent Cloud—fresh context, MCP tool calling, real-time embeddings, and enterprise governance.

Streams and Tables in Apache Kafka: Elasticity, Fault Tolerance, and Other Advanced Concepts

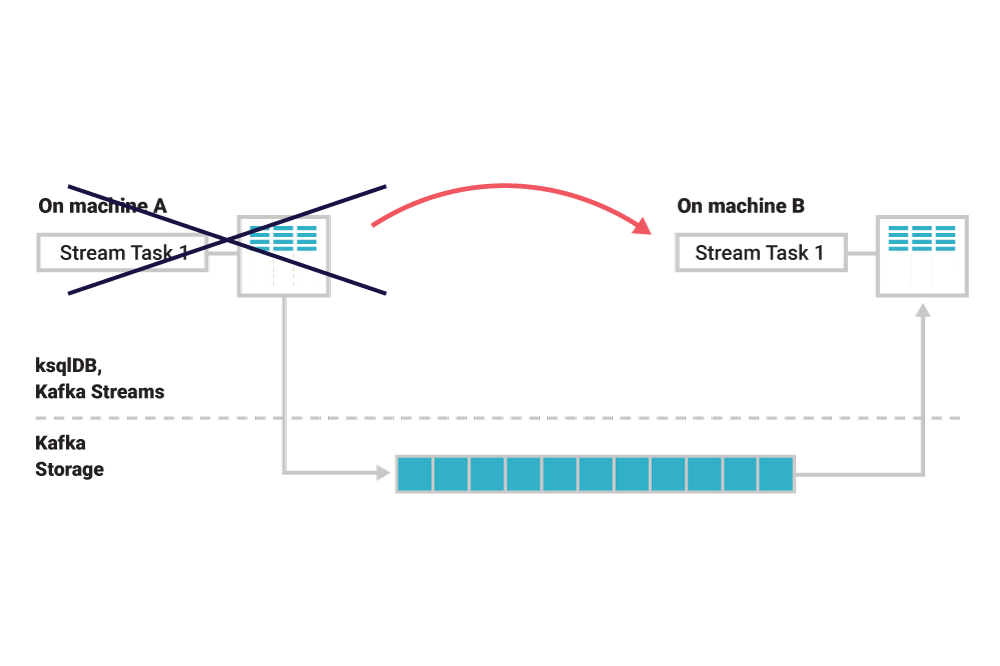

Now that we’ve learned about the processing layer of Apache Kafka® by looking at streams and tables, as well as the architecture of distributed processing with the Kafka Streams API […]

Streams and Tables in Apache Kafka: Processing Fundamentals with Kafka Streams and ksqlDB

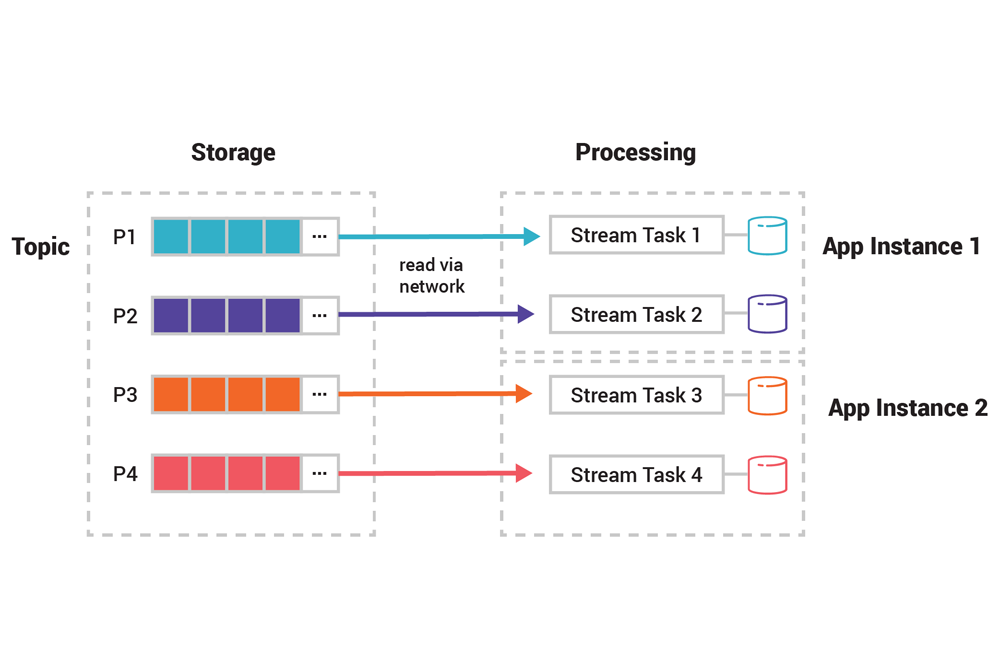

Part 2 of this series discussed in detail the storage layer of Apache Kafka: topics, partitions, and brokers, along with storage formats and event partitioning. Now that we have this […]
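Part 2 covers event partitioning. As a rough illustration of the idea: for keyed records, Kafka's default partitioner hashes the key (with murmur2) and takes the result modulo the number of partitions, so every event with the same key lands in the same partition and keeps its relative order. The minimal sketch below assumes a stand-in hash (`zlib.crc32`) instead of murmur2, so its partition numbers will not match a real producer; `choose_partition` and `partition_events` are hypothetical helpers.

```python
import zlib
from typing import Dict, List, Tuple

Event = Tuple[bytes, str]


def choose_partition(key: bytes, num_partitions: int) -> int:
    """Map a key to a partition with a deterministic hash.

    Assumption: CRC32 stands in for Kafka's murmur2, so concrete partition
    numbers differ from a real producer. The property illustrated is that
    equal keys always map to the same partition.
    """
    return zlib.crc32(key) % num_partitions


def partition_events(events: List[Event], num_partitions: int) -> Dict[int, List[Event]]:
    """Append each event to its key's partition, preserving arrival order."""
    partitions: Dict[int, List[Event]] = {p: [] for p in range(num_partitions)}
    for key, value in events:
        partitions[choose_partition(key, num_partitions)].append((key, value))
    return partitions


if __name__ == "__main__":
    events = [
        (b"alice", "login"),
        (b"bob", "login"),
        (b"alice", "add_to_cart"),
        (b"bob", "logout"),
        (b"alice", "checkout"),
    ]
    for partition, contents in partition_events(events, 3).items():
        print(partition, contents)
```

Ordering is only guaranteed within a partition, which is why routing by key matters: all of one user's events stay in sequence even though different users may be spread across partitions.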

Streams and Tables in Apache Kafka: Topics, Partitions, and Storage Fundamentals

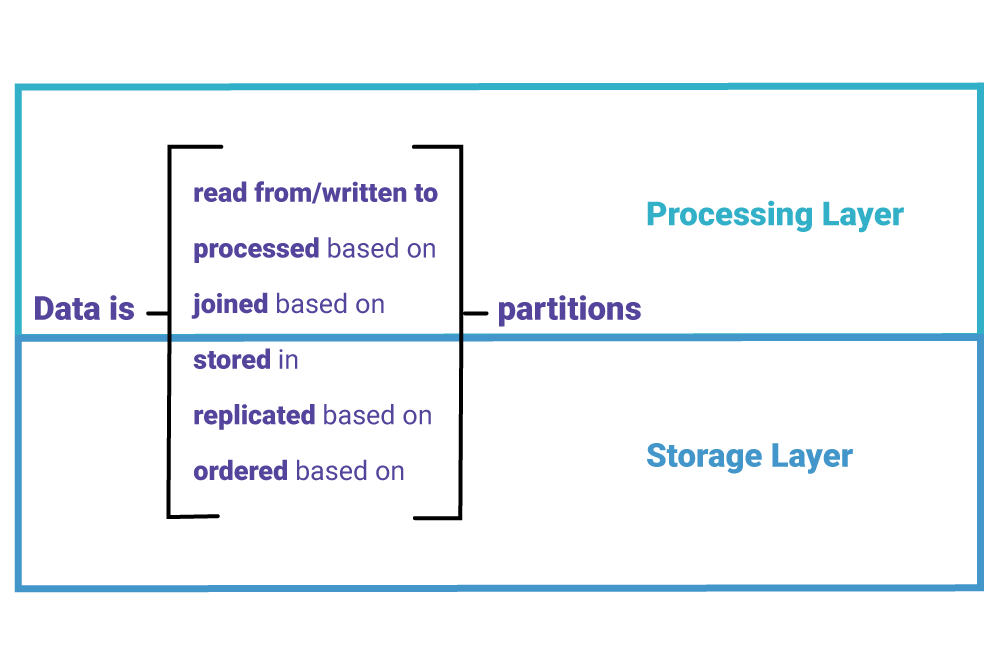

Part 1 of this series discussed the basic elements of an event streaming platform: events, streams, and tables. We also introduced the stream-table duality and learned why it is a […]
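The stream-table duality mentioned above can be sketched in a few lines: a table is what you get by replaying a stream of changes, and a stream is what you get by capturing each change made to a table. This is a minimal in-memory sketch, assuming a dict as the table and a list of `(key, value)` pairs as the stream, with `None` standing for a delete in the spirit of Kafka's tombstone records. `materialize` and `changelog` are hypothetical names, not Kafka Streams or ksqlDB APIs.

```python
from typing import Dict, List, Optional, Tuple

Change = Tuple[str, Optional[str]]


def materialize(stream: List[Change]) -> Dict[str, str]:
    """Stream -> table: replay changes, keeping the latest value per key.

    A value of None is treated as a delete, similar to a Kafka tombstone.
    """
    table: Dict[str, str] = {}
    for key, value in stream:
        if value is None:
            table.pop(key, None)
        else:
            table[key] = value
    return table


def changelog(before: Dict[str, str], after: Dict[str, str]) -> List[Change]:
    """Table -> stream: emit the changes that turn `before` into `after`."""
    changes: List[Change] = [
        (key, value) for key, value in after.items() if before.get(key) != value
    ]
    changes += [(key, None) for key in before if key not in after]
    return changes


if __name__ == "__main__":
    stream = [("alice", "Paris"), ("bob", "Sydney"), ("alice", "Rome")]
    table = materialize(stream)
    print(table)  # {'alice': 'Rome', 'bob': 'Sydney'}
    # Replaying the derived changelog rebuilds the same table.
    print(materialize(changelog({}, table)) == table)  # True
```

Note that the table forgets that Alice was ever in Paris, while the stream keeps the full history. That asymmetry is the reason both views are useful.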

Streams and Tables in Apache Kafka: A Primer

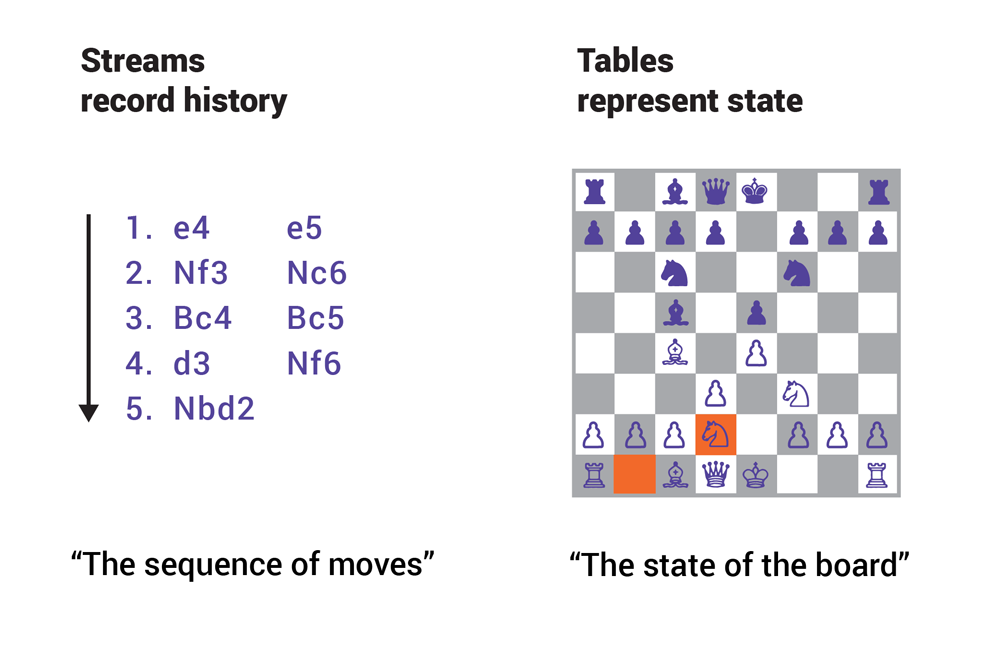

This four-part series explores the core fundamentals of Kafka’s storage and processing layers and how they interrelate. In this first part, we begin with an overview of events, streams, tables, […]

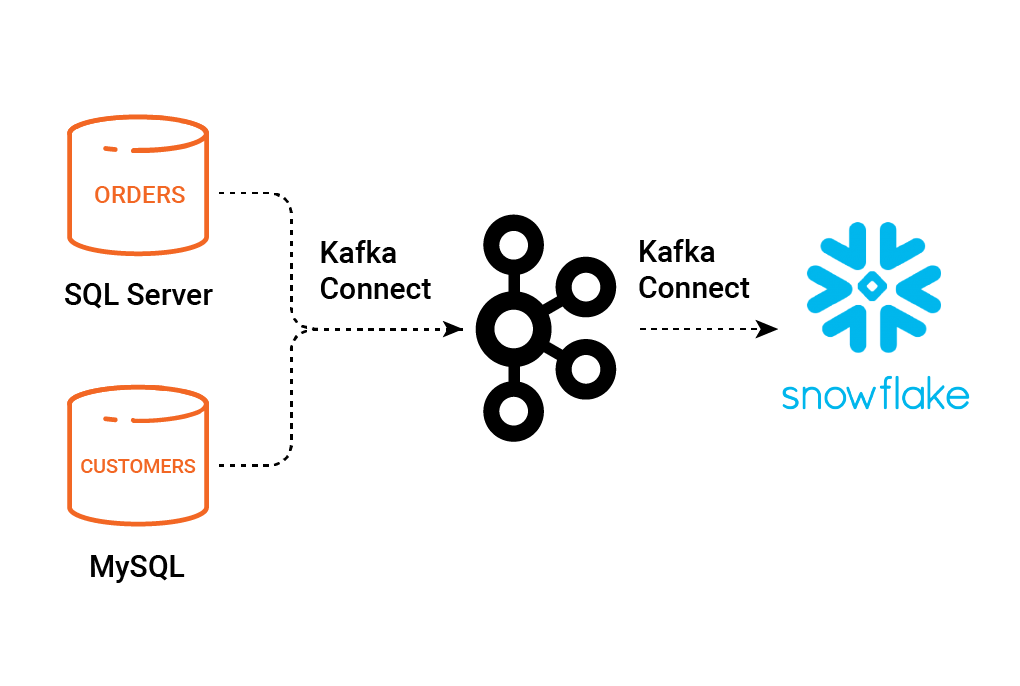

Pipeline to the Cloud – Streaming On-Premises Data for Cloud Analytics

This article shows how you can offload data from on-premises transactional (OLTP) databases to cloud-based datastores, including Snowflake and Amazon S3 with Athena. I’m also going to take the opportunity […]

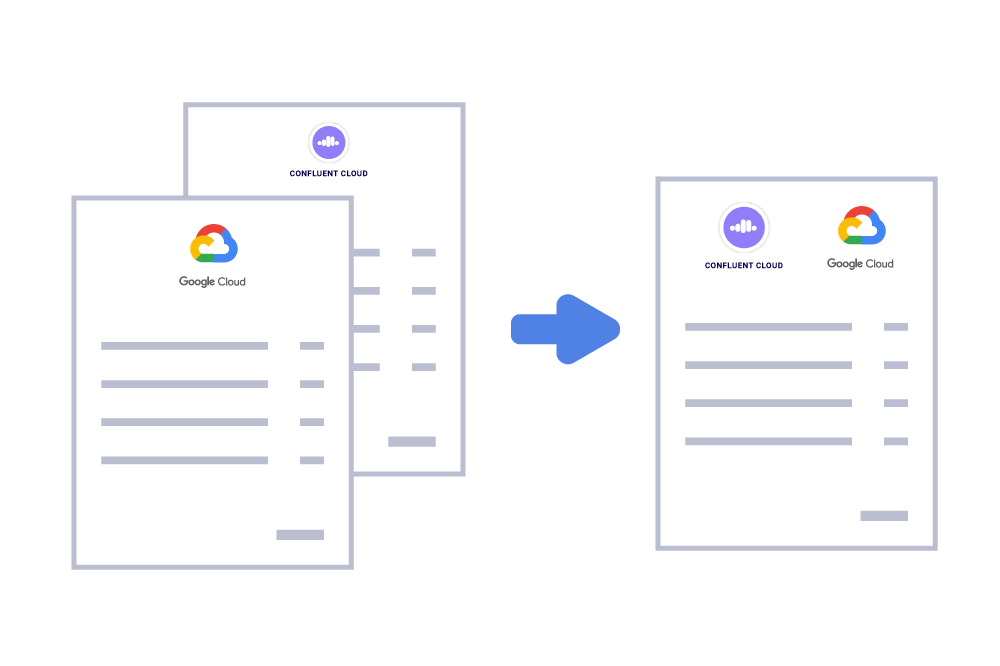

Apache Kafka as a Service with Confluent Cloud Now Available on GCP Marketplace

Following Google’s announcement to provide leading open source services with a cloud-native experience by partnering with companies like Confluent, we are delighted to share that Confluent Cloud is now available […]

Celebrating 1,000 Employees and Looking Towards the Path Ahead

During the holiday season, it’s a particularly relevant time to pause, reflect, and celebrate both the days past and those ahead. Here at Confluent, it’s a noticeably nostalgic moment, given […]

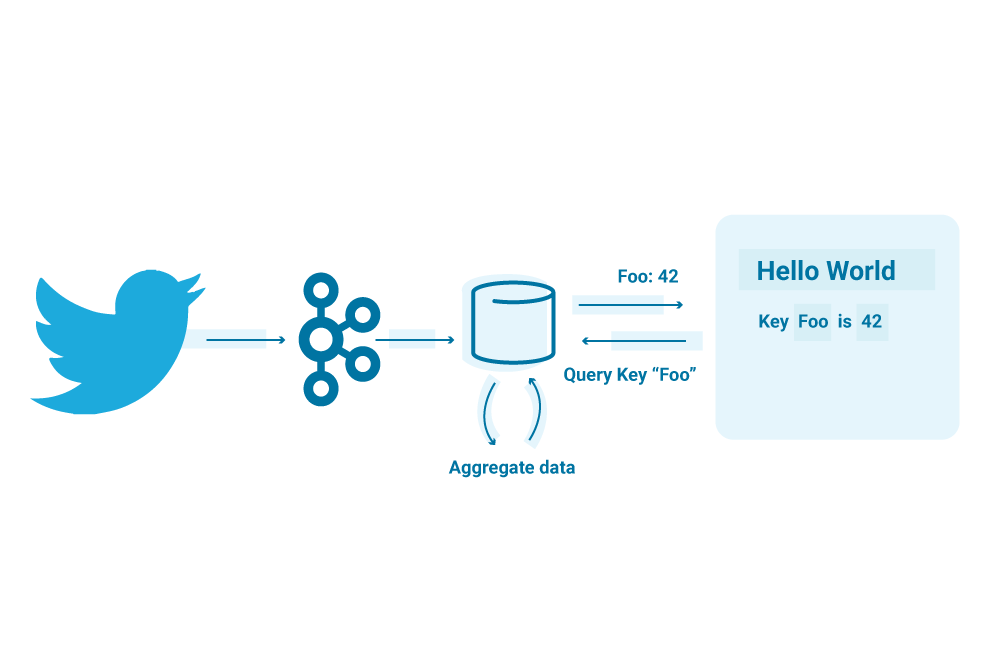

Exploring ksqlDB with Twitter Data

When KSQL was released, my first blog post about it showed how to use KSQL with Twitter data. Two years later, its successor ksqlDB was born, which we announced this […]

The Easiest Way to Install Apache Kafka and Confluent Platform – Using Ansible

With Confluent Platform 5.3, we are actively embracing the rising DevOps movement by introducing CP-Ansible, our very own open source Ansible playbooks for deployment of Apache Kafka® and the Confluent […]

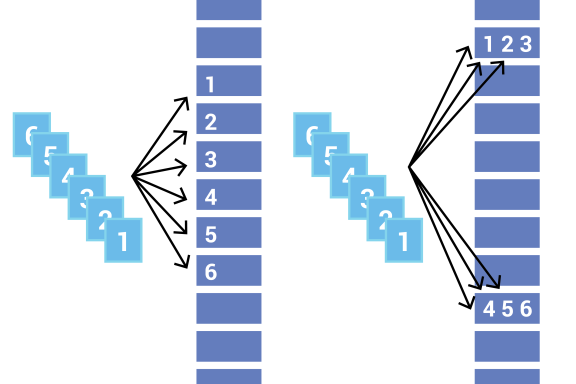

Apache Kafka Producer Improvements with the Sticky Partitioner

The amount of time it takes for a message to move through a system plays a big role in the performance of distributed systems like Apache Kafka®. In Kafka, the […]

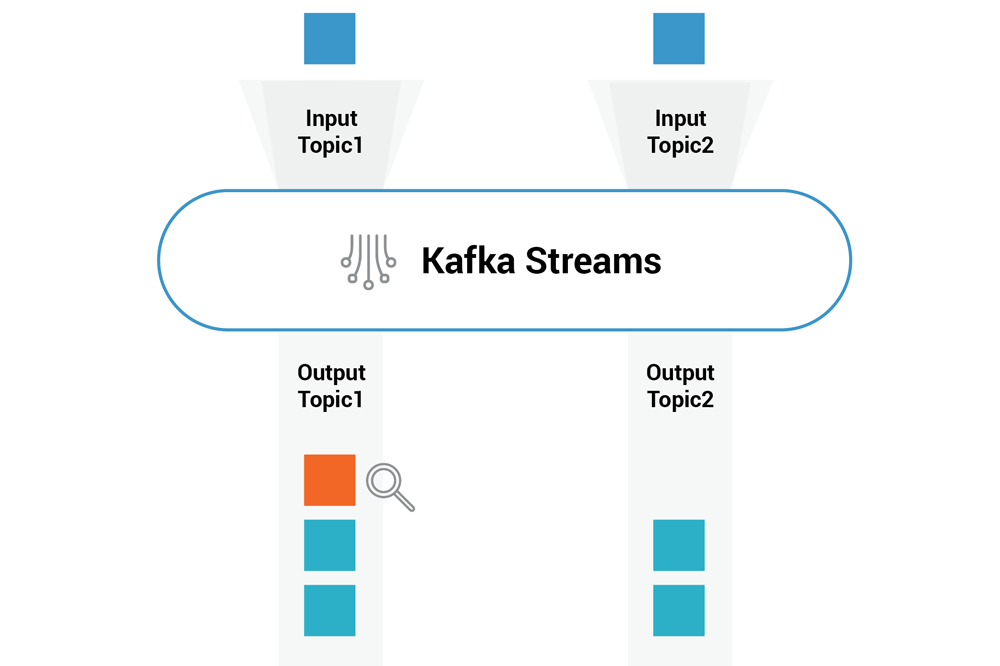

Testing Kafka Streams Using TestInputTopic and TestOutputTopic

As a test class that allows you to test Kafka Streams logic, TopologyTestDriver is much faster than using EmbeddedSingleNodeKafkaCluster and makes it possible to simulate different timing scenarios. Not […]

What’s New in Apache Kafka 2.4

On behalf of the Apache Kafka® community, it is my pleasure to announce the release of Apache Kafka 2.4.0. This release includes a number of key new features and improvements […]

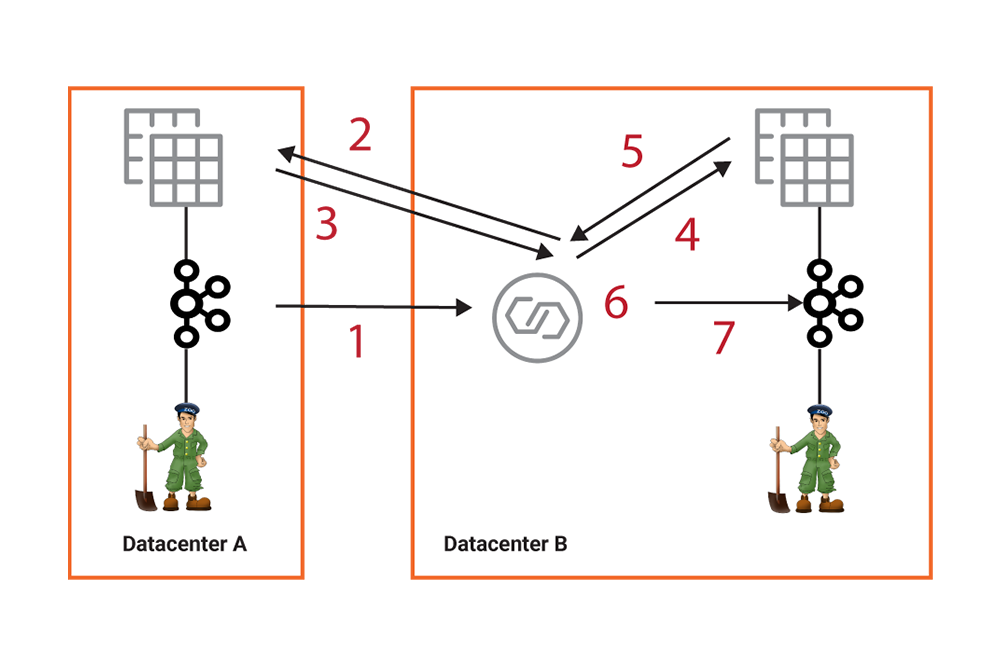

Transferring Avro Schemas Across Schema Registries with Kafka Connect

Although starting out with one Confluent Schema Registry deployment per development environment is straightforward, over time, a company may scale and begin migrating data to a cloud environment (such as […]

Integrating Apache Kafka With Python Asyncio Web Applications

Modern Python has very good support for cooperative multitasking. Coroutines were first added to the language in version 2.5 with PEP 342 and their use is becoming mainstream following the […]
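PEP 342 turned generators into coroutines by letting the caller push values in with `send()`. The sketch below contrasts that generator-based style with the native `async def`/`await` coroutines that asyncio schedules today, and shows cooperative multitasking: each task runs until it explicitly yields control. `running_average`, `worker`, and `interleave` are illustrative names only, and nothing here talks to Kafka.

```python
import asyncio
from typing import Generator, List, Optional


def running_average() -> Generator[Optional[float], float, None]:
    """PEP 342-style coroutine: receive numbers via send(), yield the running mean."""
    total = 0.0
    count = 0
    average: Optional[float] = None
    while True:
        value = yield average   # pause here until the caller sends a value
        total += value
        count += 1
        average = total / count


async def worker(name: str, steps: int, log: List[str]) -> None:
    """Native coroutine that hands control back to the event loop after each step."""
    for i in range(steps):
        log.append(f"{name}{i}")
        await asyncio.sleep(0)  # cooperative yield: let other tasks run


async def interleave() -> List[str]:
    log: List[str] = []
    await asyncio.gather(worker("a", 3, log), worker("b", 3, log))
    return log


if __name__ == "__main__":
    avg = running_average()
    next(avg)              # prime: run up to the first yield
    print(avg.send(10))    # 10.0
    print(avg.send(20))    # 15.0
    print(asyncio.run(interleave()))  # ['a0', 'b0', 'a1', 'b1', 'a2', 'b2']
```

The interleaved log only appears because each worker awaits; a worker that never awaited would run to completion before the other got a turn, which is why blocking calls inside an asyncio web application stall every other request.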